Deep Learning Dropout: Concept and TensorFlow Implementation

This guide introduces the concept of dropout, its benefits, and how to implement it using TensorFlow on the MNIST dataset. Experiment with different dropout rates and architectures to see how dropout can help your deep learning models achieve better results. You can practice further with the accompanying GitHub repository.

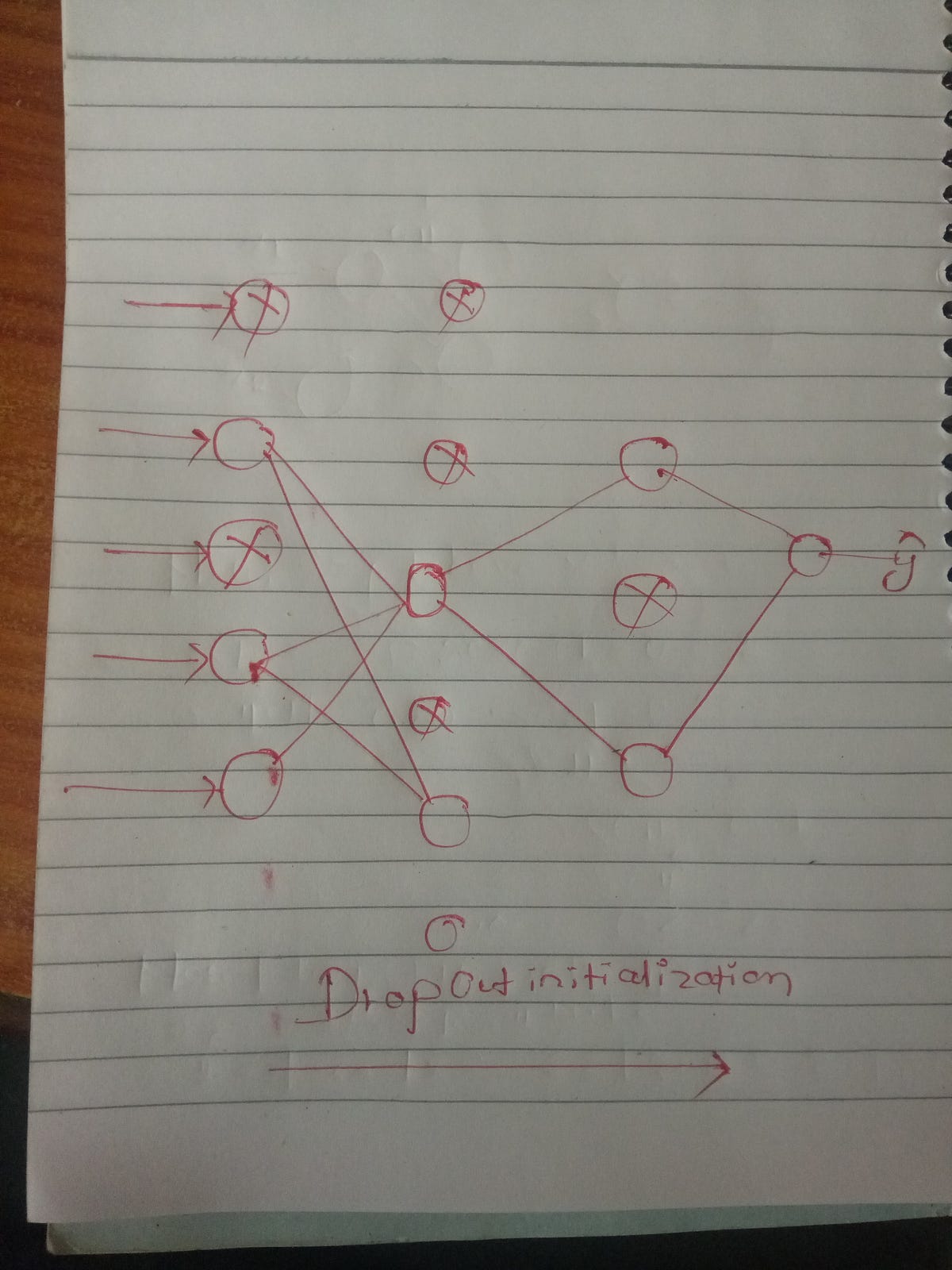

In this article, we look at the concept of dropout in depth and at how the technique can be implemented within neural networks using TensorFlow and Keras.

What is the dropout layer in deep learning? The dropout layer addresses overfitting by randomly dropping out a fraction of neurons during each training iteration. At inference time nothing is dropped and the full network is used.

Almost every data scientist who builds neural networks has used a dropout layer at some point. But why is dropout so common? How does the dropout layer work internally? What problem does it solve? Is there any alternative to dropout?

In a Keras/TensorFlow model, dropout is added as a layer of its own. The `Dropout` layer's one required argument is the dropout rate, which is the probability that any given unit is dropped during training.
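To make the internal mechanics concrete, here is a minimal NumPy sketch of "inverted" dropout, the variant Keras documents for its `Dropout` layer (surviving values are scaled by `1 / (1 - rate)`). The function name `inverted_dropout`, its parameters, and the toy all-ones activations are assumptions made for illustration, not code from the original article.

```python
import numpy as np


def inverted_dropout(activations, rate, training, rng):
    """Illustrative helper (hypothetical name): inverted dropout on an array."""
    # At inference time, or with a rate of 0, the input passes through unchanged.
    if not training or rate == 0.0:
        return activations
    keep_prob = 1.0 - rate
    # Each unit is kept independently with probability keep_prob.
    mask = rng.random(activations.shape) < keep_prob
    # Scale the survivors so the expected activation stays the same.
    return activations * mask / keep_prob


rng = np.random.default_rng(0)
hidden = np.ones((4, 1000))  # toy activations, all equal to 1

dropped = inverted_dropout(hidden, rate=0.3, training=True, rng=rng)
unchanged = inverted_dropout(hidden, rate=0.3, training=False, rng=rng)

zero_fraction = float((dropped == 0).mean())
print("fraction zeroed during training:", round(zero_fraction, 3))
print("mean activation during training:", round(float(dropped.mean()), 3))
```

With a rate of 0.3, roughly 30% of the values should be zeroed during training while the mean activation stays close to 1, which is why no extra rescaling is needed at inference time.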

To prevent overfitting, we deliberately limit the model's capacity to fit the training data by using regularization strategies. Dropout is one such technique. Regularization helps the model perform as intended on unseen data.

Dropout works by injecting noise into the network during training. While the theoretical justification for this is certainly up for debate, the technique itself has proved enduring, and various forms of dropout are implemented in most deep learning libraries. The key challenge is how to inject this noise.

Let's break down the steps needed to implement dropout regularization in a neural network using TensorFlow. We create a simple feedforward neural network with `tf.keras` and apply dropout to reduce overfitting. The architecture is fully connected, with a dropout layer placed after each hidden layer. For example, `layers.Dropout(0.3)` placed after the first hidden layer drops 30% of that layer's outputs during training.
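The network described above might look like the following sketch. The layer sizes (128 and 64 units), the second dropout layer's rate, the optimizer, and the random stand-in batch are assumptions for illustration; only the `Dropout(0.3)` after the first hidden layer comes from the text. The input size of 784 assumes flattened 28x28 MNIST images.

```python
import numpy as np
import tensorflow as tf

layers = tf.keras.layers

# Fully connected network with a dropout layer after each hidden layer.
model = tf.keras.Sequential([
    tf.keras.Input(shape=(784,)),            # flattened 28x28 image (assumed)
    layers.Dense(128, activation="relu"),    # first hidden layer (size assumed)
    layers.Dropout(0.3),                     # drops 30% of its inputs in training
    layers.Dense(64, activation="relu"),     # second hidden layer (size assumed)
    layers.Dropout(0.3),                     # rate assumed
    layers.Dense(10, activation="softmax"),  # one output per digit class
])

model.compile(
    optimizer="adam",
    loss="sparse_categorical_crossentropy",
    metrics=["accuracy"],
)

# Random stand-in for a batch of images, so the sketch runs without a download.
batch = np.random.rand(8, 784).astype("float32")

# Dropout is inactive at inference: repeated calls give identical outputs.
eval_a = model(batch, training=False).numpy()
eval_b = model(batch, training=False).numpy()

# Dropout is active in training mode: a fresh random mask is drawn per call.
train_a = model(batch, training=True).numpy()

print("inference outputs identical:", np.allclose(eval_a, eval_b))
print("output shape:", eval_a.shape)
```

To train on the real data, you could load MNIST with `tf.keras.datasets.mnist.load_data()`, reshape the images to 784 features, scale them to the range 0 to 1, and call `model.fit`. Keras switches dropout on during `fit` and off during `evaluate` and `predict` automatically.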
