Decoding GPTs and LLMs: Training, Memory, and Advanced Architectures

Pre-training and fine-tuning methods: learn how GPTs and LLMs are trained on vast datasets to grasp language patterns, and how fine-tuning tailors them for specific tasks. Context window in AI: explore the concept of the context window, which acts as a short-term memory for LLMs and influences how they process and respond to information.
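To make the "short-term memory" idea concrete, here is a minimal sketch of how a chat application might trim its history so that it fits inside a fixed context window. The helper names `count_tokens` and `fit_to_context` are hypothetical, and counting whitespace-separated words is only a rough stand-in for a real tokenizer; actual models measure the window in subword tokens.

```python
from typing import List


def count_tokens(text: str) -> int:
    """Rough stand-in for a tokenizer: counts whitespace-separated words."""
    return len(text.split())


def fit_to_context(messages: List[str], max_tokens: int) -> List[str]:
    """Keep the newest messages whose combined token count fits the window."""
    kept: List[str] = []
    used = 0
    # Walk from newest to oldest so recent turns are kept first.
    for message in reversed(messages):
        cost = count_tokens(message)
        if used + cost > max_tokens:
            break  # everything older falls outside the window
        kept.append(message)
        used += cost
    kept.reverse()  # restore chronological order
    return kept


history = [
    "My name is Ada.",
    "I live in London.",
    "What is the capital of France?",
]
window = fit_to_context(history, max_tokens=10)
print(window)
```

With a budget of 10 word-tokens, the oldest message no longer fits, so the model would never see the user's name. This is the practical meaning of having a context window but no long-term memory.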

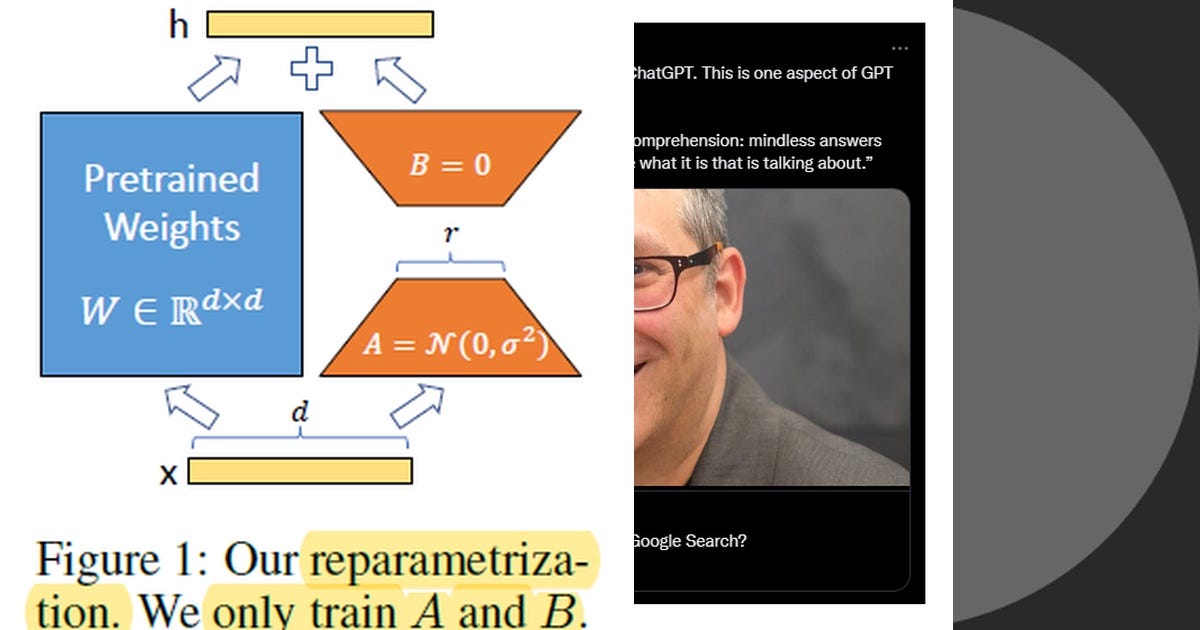

In today's episode, we'll cover GPTs and LLMs, their pre-training and fine-tuning methods, their context window and lack of long-term memory, architectures that query databases, a PDF app's use of near-real-time fine-tuning, and the book "AI Unraveled," which answers FAQs about AI.

The dominant architecture underpinning most state-of-the-art LLMs is the transformer. Introduced in the 2017 paper "Attention Is All You Need," the transformer architecture revolutionized natural language processing by relying on the concept of self-attention. Large language models (LLMs) like GPT-4 excel in NLP tasks through advanced architectures, including encoder-decoder, causal decoder, and prefix decoder. This article delves into their configurations, activation functions, and training stability for optimal performance.
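The difference between a causal decoder and a prefix decoder comes down to which positions each token is allowed to attend to. The following is a simplified, pure-Python sketch of single-head scaled dot-product self-attention with both mask types. The function names are illustrative, and the example reuses the raw input as queries, keys, and values; a real transformer would first apply learned projection matrices, which are omitted here as an assumption for brevity.

```python
import math
from typing import List

Matrix = List[List[float]]
Mask = List[List[bool]]


def causal_mask(n: int) -> Mask:
    """Causal decoder: position i may attend to position j only when j <= i."""
    return [[j <= i for j in range(n)] for i in range(n)]


def prefix_mask(n: int, prefix_len: int) -> Mask:
    """Prefix decoder: prefix positions see each other fully, the rest is causal."""
    return [[j <= i or j < prefix_len for j in range(n)] for i in range(n)]


def softmax(scores: List[float]) -> List[float]:
    """Numerically stable softmax; masked scores of -inf become weight 0."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def self_attention(q: Matrix, k: Matrix, v: Matrix, mask: Mask) -> Matrix:
    """Scaled dot-product attention: softmax(q k^T / sqrt(d)) v, with a mask."""
    d = len(q[0])
    output: Matrix = []
    for i, q_i in enumerate(q):
        scores = []
        for j, k_j in enumerate(k):
            if mask[i][j]:
                dot = sum(a * b for a, b in zip(q_i, k_j))
                scores.append(dot / math.sqrt(d))
            else:
                scores.append(float("-inf"))  # blocked position
        weights = softmax(scores)
        # Each output row is a weighted average of the value vectors.
        row = [sum(w * v_j[c] for w, v_j in zip(weights, v)) for c in range(len(v[0]))]
        output.append(row)
    return output


# Three toy token embeddings of dimension 2 (made-up numbers).
x = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
causal_out = self_attention(x, x, x, causal_mask(3))
prefix_out = self_attention(x, x, x, prefix_mask(3, prefix_len=2))
print(causal_out[0], prefix_out[0])
```

Under the causal mask the first token can only attend to itself, so its output equals its own value vector. Under the prefix mask with a two-token prefix, the first token also attends to the second, so its output is a blend of both.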

In this chapter, we'll dive into the evolution of large language models (LLMs), starting from the basics of transformers to the rise of popular models like Llama, GPT, and Claude. To conduct the knowledge acquisition experiment, we use three series of typical decoder-only LLMs to yield consistent findings on different architectures: GPT-2, Llama, and Phi.

A large language model (LLM) is a language model trained with self-supervised machine learning on a vast amount of text, designed for natural language processing tasks, especially language generation. The largest and most capable LLMs are generative pre-trained transformers (GPTs), which are largely used in generative chatbots such as ChatGPT, Gemini, and Claude. LLMs can be fine-tuned for specific tasks. Understand the intricacies of how these AI models learn through pre-training and fine-tuning, their operational scope within a context window, and the intriguing aspect of their lack of long-term memory.
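"Self-supervised" means the training labels come from the text itself: for a decoder-only model, each token serves as the target for the tokens that precede it. The sketch below shows how such (context, next token) pairs could be derived from raw text. The function name `next_token_pairs` and the word-level tokenization are assumptions for illustration; real pipelines use subword tokenizers and batch these examples very differently.

```python
from typing import List, Tuple


def next_token_pairs(tokens: List[str], context_size: int) -> List[Tuple[List[str], str]]:
    """Build (context, target) pairs where the target is the next token."""
    pairs = []
    for i in range(1, len(tokens)):
        # The context is limited to the most recent `context_size` tokens.
        start = max(0, i - context_size)
        pairs.append((tokens[start:i], tokens[i]))
    return pairs


tokens = "the cat sat on the mat".split()
pairs = next_token_pairs(tokens, context_size=3)
for context, target in pairs:
    print(context, "->", target)
```

No human annotation is needed to produce these examples, which is why pre-training can scale to very large text collections. Fine-tuning then continues training on a smaller, task-specific set of examples.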

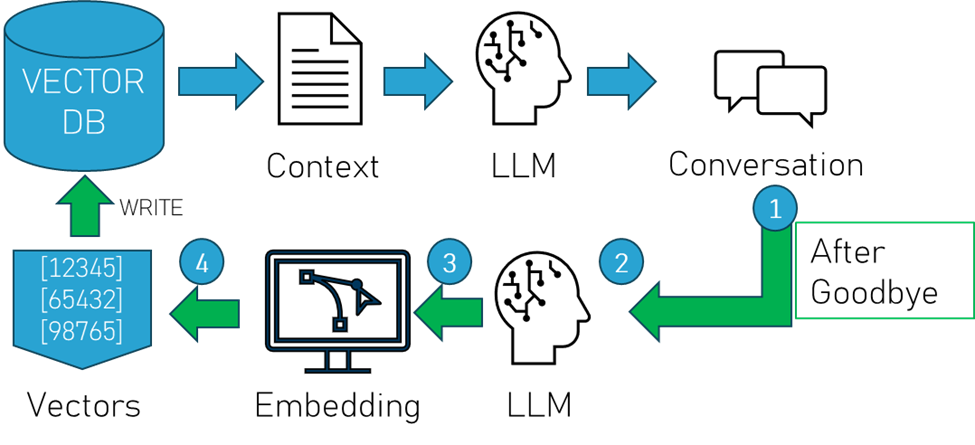
