Databricks AutoML First Look: Auto-Generate Auto-Tuned Jupyter ML Notebooks in Python

Regression with AutoML (Databricks on AWS)

There is a lot of confusion about the use of parameters in SQL, but Databricks has started harmonizing heavily (for example, three months ago `IDENTIFIER()` didn't work with a catalog; now it does). Check my answer for a working solution.

Databricks is smart and all, but how do you identify the path of your current notebook? The guide on the website does not help. It suggests: `%scala dbutils.notebook.getContext.notebookPath` res1: ...

AutoML Toolkit setup.py at master (databrickslabs/automl-toolkit, GitHub)

It's not possible. Databricks simply scans the entire output for occurrences of secret values and replaces them with "[REDACTED]". The scan is powerless once you transform the value: for example, as you already tried, inserting spaces between the characters reveals the value. You can also use a trick with an invisible character, for example the Unicode invisible separator, which is encoded as ...

I am trying to convert a SQL stored procedure to a Databricks notebook. The two statements from the stored procedure below need to be implemented. Here, tables 1 and 2 are Delta Lake tables in Databricks c...

In Python, Delta Live Tables determines whether to update a dataset as a materialized view or a streaming table based on the defining query. The `@table` decorator is used to define both materialized views and streaming tables. To define a materialized view in Python, apply `@table` to a query that performs a static read against a data source. To define a streaming table, apply `@table` to a query that performs a streaming read against a data source.

Actually, without using shutil, I can compress files in Databricks DBFS into a zip file stored as a blob in Azure Blob Storage that has been mounted to DBFS. Here is my sample code using the Python standard libraries os and zipfile.
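The answer's own code is not included in the text above, so what follows is only a minimal sketch of the approach it describes, using nothing beyond `os` and `zipfile`. The function name `zip_directory` and the example paths in the trailing comment are hypothetical, not taken from the original answer.

```python
import os
import zipfile
from typing import List


def zip_directory(source_dir: str, zip_path: str) -> List[str]:
    """Compress every file under source_dir into zip_path.

    Returns the archive-relative names that were written.
    """
    archived = []
    zip_abs = os.path.abspath(zip_path)
    with zipfile.ZipFile(zip_path, "w", zipfile.ZIP_DEFLATED) as zf:
        for root, _dirs, files in os.walk(source_dir):
            for name in sorted(files):
                full_path = os.path.join(root, name)
                # Skip the archive itself if it lives inside source_dir.
                if os.path.abspath(full_path) == zip_abs:
                    continue
                # Store paths relative to source_dir so the archive
                # does not embed the absolute mount path.
                arcname = os.path.relpath(full_path, source_dir)
                zf.write(full_path, arcname)
                archived.append(arcname)
    return archived


# Hypothetical usage on a cluster where DBFS is exposed under /dbfs:
# zip_directory("/dbfs/mnt/<mount-name>/input", "/tmp/input.zip")
```

One design caveat, stated as an assumption about the environment: the `/dbfs` mount may not support the random writes that `zipfile` performs, so it can be safer to write the archive to local disk (as in the commented usage) and copy it to the mounted location afterwards.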

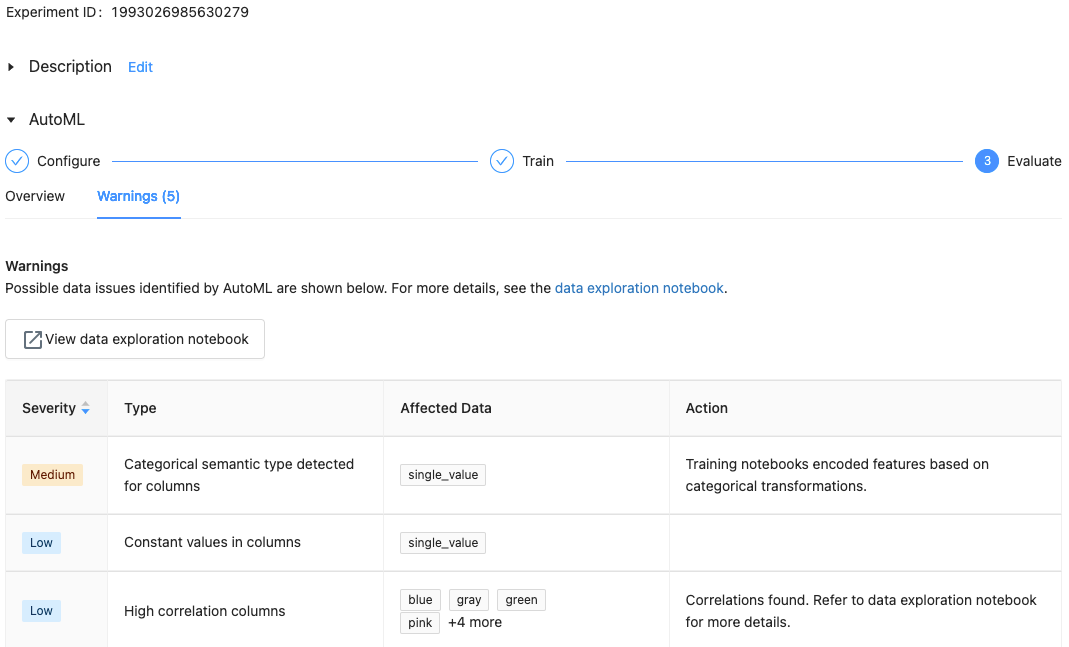

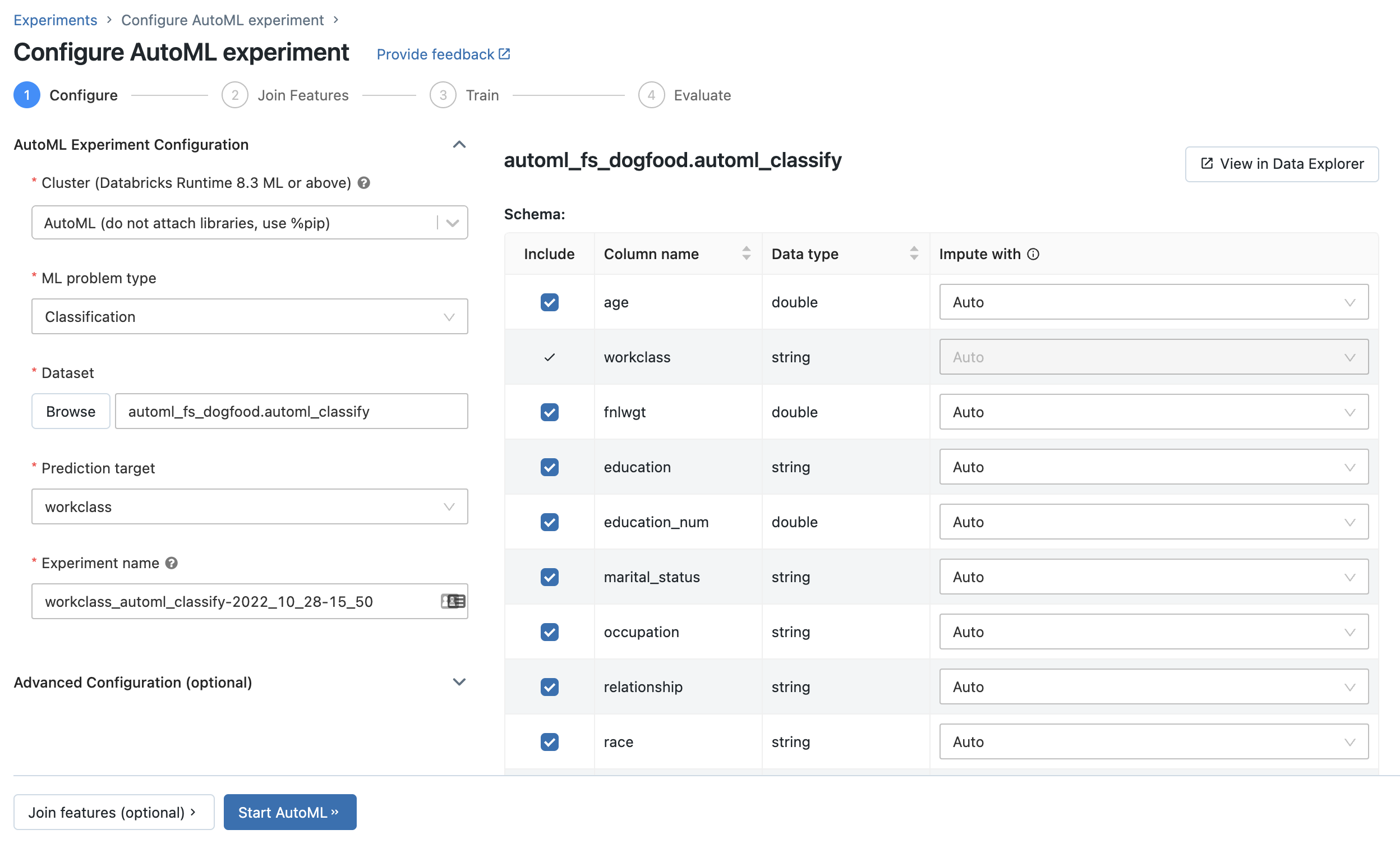

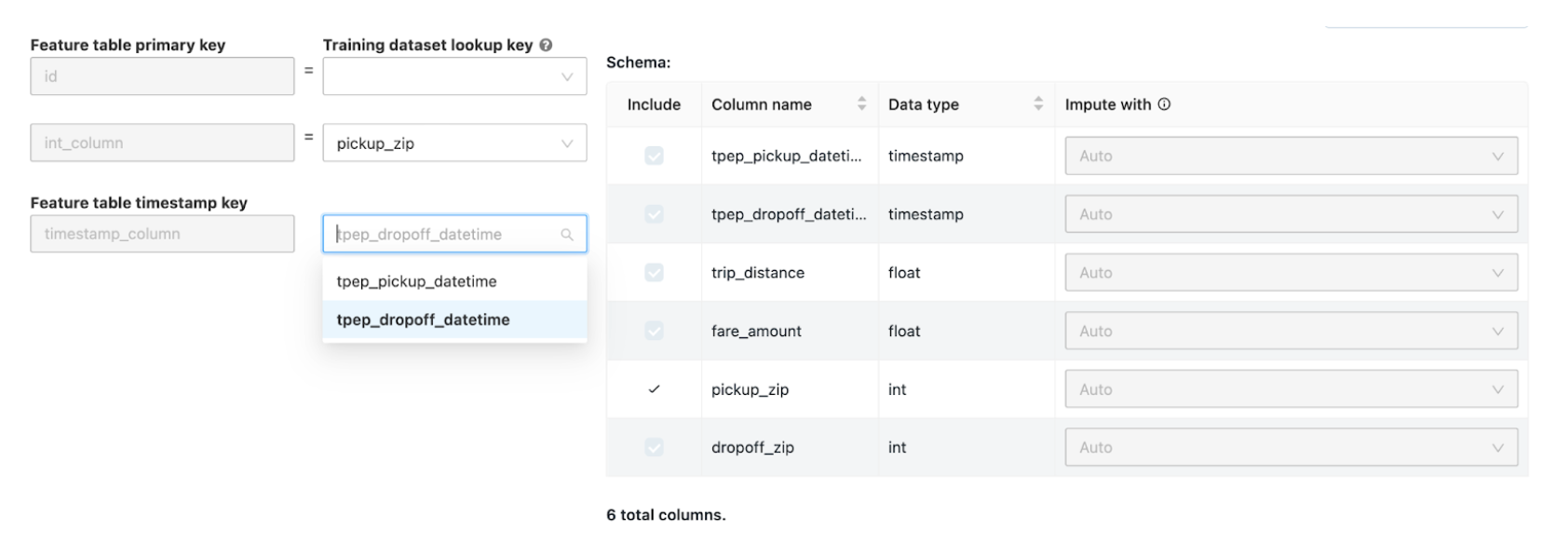

Train ML models with the Databricks AutoML UI (Databricks on AWS)

Saving a file locally in Databricks PySpark.

Installing multiple libraries "permanently" on a Databricks cluster.

I want to be able to create an external table in Unity Catalog (via Azure Databricks) using this location. An external location has already been created by pointing to the main storage container within the storage account.

Method 3: Using a third-party tool named DBFS Explorer. DBFS Explorer was created as a quick way to upload and download files to the Databricks File System (DBFS). It works with both AWS and Azure instances of Databricks. You will need to create a bearer token in the web interface in order to connect.


AutoML: What, Why, When, and Open-Source Packages (Analytics Vidhya)
