Cross Validation In Machine Learning: The Ultimate Guide


Guide To Cross Validation In Machine Learning

Introduction

Training a binary classifier on a small and imbalanced dataset (220 samples, 58 positives) makes it challenging to obtain a robust estimate of model performance and generalisation. This response will address:

- the correctness of your nested CV pipeline;
- whether bootstrap confidence intervals are appropriate for the performance metrics;
- the implications of performing feature selection within folds;
- additional …

Cross-attention mask: as with the previous two masks, it should mask input that the model "shouldn't have access to". In a translation scenario, every decoder position may attend to the entire source input, so the cross-attention mask is typically just the source padding mask. Restricting the decoder to the output generated so far is handled by the causal mask in the decoder's self-attention, not by the cross-attention mask.
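The nested CV pipeline and in-fold feature selection mentioned in the introduction can be sketched in plain Python. This is a minimal sketch under stated assumptions, not the pipeline from the question: the data are synthetic (220 rows, 58 positives, only to match the question's imbalance), the model is a hypothetical toy nearest-centroid classifier, and `stratified_kfold`, `fit_and_score`, `nested_cv` and the tuned parameter `m` (the number of features kept) are illustrative names. The point is structural: feature selection and the choice of `m` only ever see the outer-training rows, and each outer test fold is scored exactly once.

```python
import random
from typing import List, Sequence, Tuple

Split = Tuple[List[int], List[int]]  # (train indices, test indices)


def stratified_kfold(labels: Sequence[int], k: int, seed: int = 0) -> List[Split]:
    """Deal each class's shuffled indices round-robin into k folds, so every
    fold keeps roughly the overall class ratio (matters with few positives)."""
    rng = random.Random(seed)
    folds: List[List[int]] = [[] for _ in range(k)]
    for cls in sorted(set(labels)):
        idx = [i for i, lab in enumerate(labels) if lab == cls]
        rng.shuffle(idx)
        for j, i in enumerate(idx):
            folds[j % k].append(i)
    return [
        (sorted(i for g in range(k) if g != f for i in folds[g]), sorted(folds[f]))
        for f in range(k)
    ]


def fit_and_score(X, y, train: List[int], test: List[int], m: int) -> float:
    """Hypothetical toy model: keep the m features whose class means differ most
    on the TRAINING rows, classify by nearest class centroid on those features,
    and return balanced accuracy on the test rows."""
    n_feat = len(X[0])

    def centroid(cls: int) -> List[float]:
        pts = [X[i] for i in train if y[i] == cls]
        return [sum(p[f] for p in pts) / len(pts) for f in range(n_feat)]

    c0, c1 = centroid(0), centroid(1)
    # Feature selection happens here, inside the fold, using training rows only.
    chosen = sorted(range(n_feat), key=lambda f: abs(c1[f] - c0[f]), reverse=True)[:m]

    def predict(i: int) -> int:
        d0 = sum((X[i][f] - c0[f]) ** 2 for f in chosen)
        d1 = sum((X[i][f] - c1[f]) ** 2 for f in chosen)
        return 1 if d1 < d0 else 0

    recalls = []
    for cls in (0, 1):
        rows = [i for i in test if y[i] == cls]
        recalls.append(sum(predict(i) == cls for i in rows) / len(rows))
    return sum(recalls) / 2  # balanced accuracy: mean of per-class recall


def nested_cv(X, y, candidates: Sequence[int], k_outer: int = 5,
              k_inner: int = 3, seed: int = 0) -> List[float]:
    """Outer folds estimate performance; the inner folds choose m using only
    the outer-training rows, so the outer test fold never influences a choice."""
    outer_scores = []
    for train, test in stratified_kfold(y, k_outer, seed):
        inner_splits = stratified_kfold([y[i] for i in train], k_inner, seed)

        def inner_mean(m: int) -> float:
            # Inner indices are positions within `train`; map them back to rows.
            scores = [
                fit_and_score(X, y, [train[i] for i in itr], [train[i] for i in ival], m)
                for itr, ival in inner_splits
            ]
            return sum(scores) / len(scores)

        best_m = max(candidates, key=inner_mean)
        outer_scores.append(fit_and_score(X, y, train, test, best_m))
    return outer_scores


if __name__ == "__main__":
    # Synthetic data with the question's size and imbalance; only features 0 and 1 carry signal.
    rng = random.Random(42)
    y = [1] * 58 + [0] * 162
    X = [[rng.gauss(1.5 if (lab == 1 and f < 2) else 0.0, 1.0) for f in range(10)] for lab in y]
    scores = nested_cv(X, y, candidates=[1, 2, 5])
    print("outer-fold balanced accuracy:", [round(s, 3) for s in scores])
```

The outer scores estimate how well the whole procedure (select features, tune `m`, fit) generalises; they do not describe any single fitted model. In scikit-learn the same structure is usually expressed by wrapping the selector and classifier in a `Pipeline`, tuning it with `GridSearchCV`, and passing that search object to `cross_val_score` with a `StratifiedKFold` splitter.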

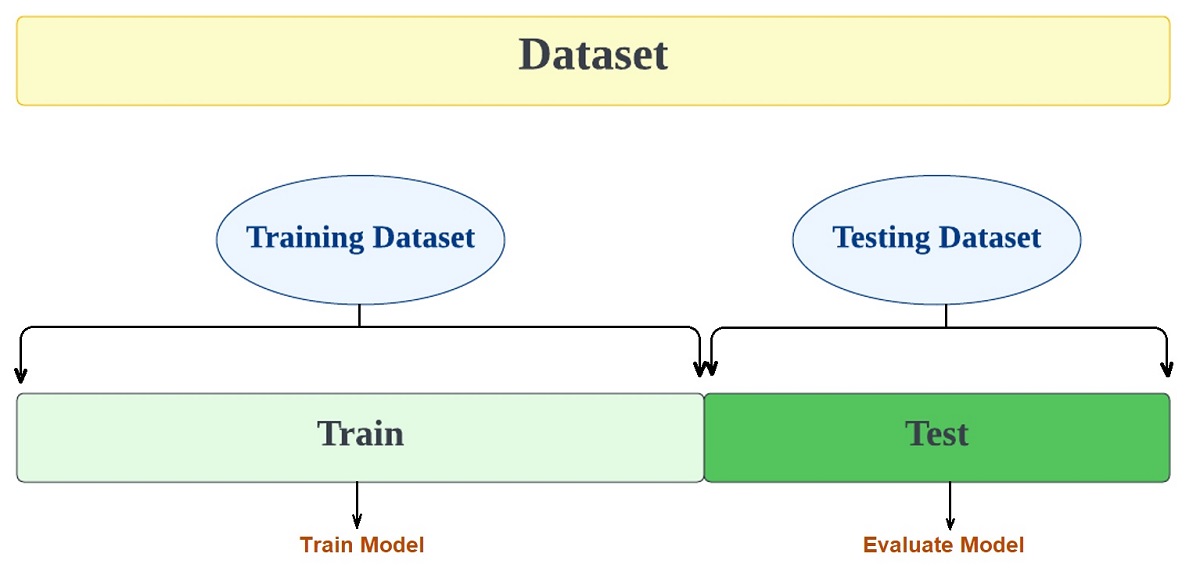

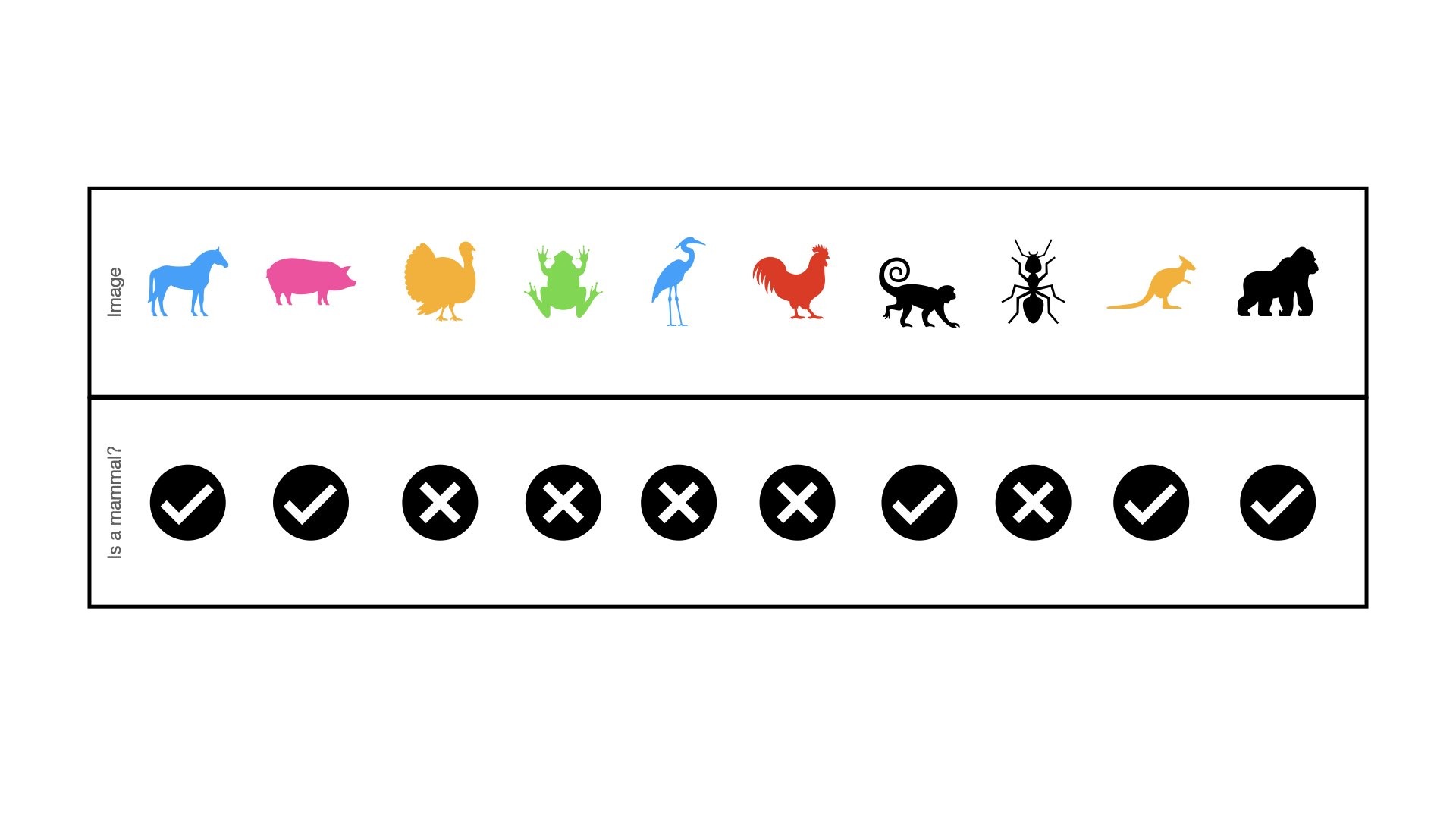

I'm trying to implement a binary classification model using TensorFlow Keras and stumbled over a problem that I cannot grasp. My model shall classify images of houses into the two classes of "old …

Blocked time series cross-validation is very much like traditional cross-validation. As you know, CV takes a portion of the dataset and sets it aside only for testing purposes. In traditional CV, that portion can be taken from any part of the original data (beginning, middle, end, etc.); it does not matter where, because you assume the observations are independent and identically distributed, for example that the variance is the same throughout.

I'm trying to describe mathematically how stochastic gradient descent (SGD) could be used to minimize the binary cross-entropy loss. The typical description of SGD that I can find online is: $\theta = \ldots$

A common confusion is to assume that cross-validation is similar to a regular training stage and therefore produces a model. This assumption is wrong: CV consists of repeated training and testing for the purpose of evaluating a method and its parameters, not of producing a final model.
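For the blocked time series cross-validation discussed above, one common formulation, assumed here, cuts the series into contiguous, non-overlapping blocks and, inside each block, trains on the earlier part and tests on the later part. The function name `blocked_time_series_splits`, the 80/20 split within each block (`train_frac`) and the optional `gap` between training and test steps are illustrative choices, not taken from the text. Unlike traditional CV, where the held-out portion can come from anywhere, here every test index comes after the training indices it is paired with.

```python
from typing import List, Tuple


def blocked_time_series_splits(n: int, n_blocks: int, train_frac: float = 0.8,
                               gap: int = 0) -> List[Tuple[range, range]]:
    """Cut time indices 0..n-1 into n_blocks contiguous, non-overlapping blocks.
    Inside each block the earlier part is used for training and the later part
    for testing, optionally skipping `gap` steps in between, so no test point
    precedes the training data it is evaluated against."""
    size = n // n_blocks
    splits = []
    for b in range(n_blocks):
        start = b * size
        stop = n if b == n_blocks - 1 else start + size  # last block takes the remainder
        cut = start + int((stop - start) * train_frac)
        splits.append((range(start, cut), range(min(cut + gap, stop), stop)))
    return splits


if __name__ == "__main__":
    for train, test in blocked_time_series_splits(n=100, n_blocks=4, gap=2):
        print(f"train {train.start}-{train.stop - 1}, test {test.start}-{test.stop - 1}")
```

With `n=100`, `n_blocks=4` and `gap=2`, the first block trains on steps 0-19 and tests on steps 22-24. The skipped steps are meant to reduce leakage from autocorrelation between the end of a training span and the start of its test span.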

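For the question about describing SGD on the binary cross-entropy loss, here is a worked derivation under one explicit assumption: the model is logistic regression, $p_i = \sigma(\theta^\top x_i)$ with $\sigma(z) = 1/(1 + e^{-z})$. The learning rate $\eta$ and the training example $(x_i, y_i)$ with $y_i \in \{0, 1\}$ are symbols introduced here for illustration. For a deeper network such as the Keras image classifier, the loss is the same, but $\nabla_\theta p_i$ comes from backpropagation rather than the closed form below.

Per-example loss:

$$
L_i(\theta) = -\big[\, y_i \log p_i + (1 - y_i) \log (1 - p_i) \,\big], \qquad p_i = \sigma(\theta^\top x_i)
$$

Using $\sigma'(z) = \sigma(z)\,(1 - \sigma(z))$, the gradient simplifies to

$$
\nabla_\theta L_i(\theta) = -\left[ \frac{y_i}{p_i} - \frac{1 - y_i}{1 - p_i} \right] p_i (1 - p_i)\, x_i = (p_i - y_i)\, x_i
$$

SGD draws one example $i$ at random and updates

$$
\theta \leftarrow \theta - \eta\, (p_i - y_i)\, x_i
$$

With a mini-batch $B$, the per-example gradients are averaged: $\theta \leftarrow \theta - \frac{\eta}{|B|} \sum_{i \in B} (p_i - y_i)\, x_i$.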
