Core Dumped On Trying To Import From Llama Cpp Module When Built With

When building the same llama.cpp source code with CMake instead, using the following line, the binaries (like `main`) throw a core dump error as soon as they start.

I am trying to write a simple application that uses llama.cpp, and I am including it in my application using CMake and vcpkg. My CMakeLists.txt is: cmake_minimum_required(VERSION 4.0) if (WIN32) f.

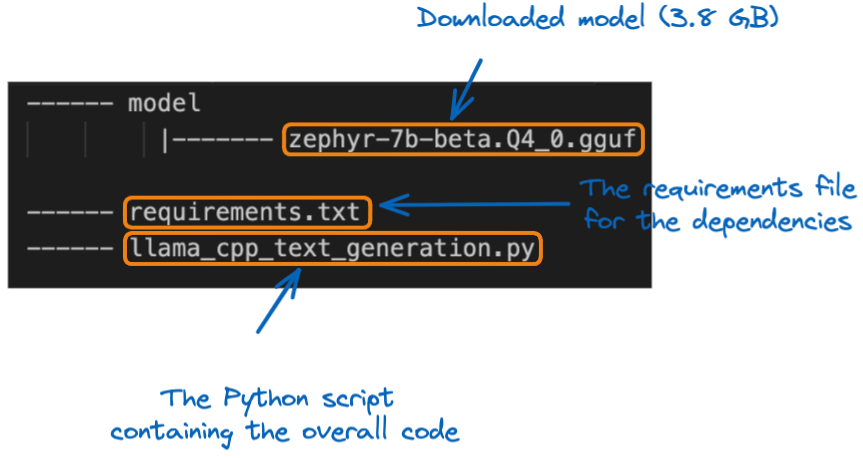

The example you used there, `ggml-gpt4all-j-v1.3-groovy.bin`, is a GPT-J model, which llama.cpp does not support, even if the file had been updated to the latest GGMLv3 format, which it likely has not.

`Illegal instruction (core dumped)`: this also affects installing llama-cpp-python using pip (with `FORCE_CMAKE=1`) and then trying to use it, or even just importing the module into Python.

Apologies, the originally uploaded GGUFs had an error. Please try re-downloading; the newly uploaded GGUFs are confirmed to work with the latest llama.cpp. I've not tested them with llama-cpp-python, but I would hope they work there too.

In this guide, we'll walk you through installing llama.cpp, setting up models, running inference, and interacting with it via Python and HTTP APIs.
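Because an `Illegal instruction (core dumped)` crash takes the whole interpreter down with it, it can help to attempt the import in a child process and inspect how that process ended. The following is a minimal sketch under stated assumptions: the helper names `describe_exit` and `probe_import` are hypothetical and not part of llama-cpp-python, and the signal decoding relies on POSIX behaviour, where a negative return code means the child was killed by a signal.

```python
import signal
import subprocess
import sys


def describe_exit(returncode: int) -> str:
    """Turn a subprocess return code into a short human-readable string."""
    if returncode == 0:
        return "ok"
    if returncode < 0:
        # On POSIX, a negative return code means the child died from a signal.
        try:
            name = signal.Signals(-returncode).name
        except ValueError:
            name = f"signal {-returncode}"
        return f"killed by {name}"
    return f"exited with code {returncode}"


def probe_import(module_name: str, timeout: float = 60.0) -> dict:
    """Import `module_name` in a child interpreter and report what happened."""
    result = subprocess.run(
        [sys.executable, "-c", f"import {module_name}"],
        capture_output=True,
        text=True,
        timeout=timeout,
    )
    return {
        "module": module_name,
        "ok": result.returncode == 0,
        "status": describe_exit(result.returncode),
        "stderr": result.stderr.strip(),
    }


if __name__ == "__main__":
    # "llama_cpp" is the import name used by llama-cpp-python.
    print(probe_import("llama_cpp"))
```

A status of `killed by SIGILL` would suggest that the compiled library uses CPU instructions the machine does not support, whereas `exited with code 1` usually indicates an ordinary Python exception such as a missing module.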

So I rolled back to version 0.3.6, which uses an older llama.cpp build, and now I'm able to run any model, such as the llama3.1 400b model, without any problems.

One suggestion is to merge the files with the `cat` command and the `-n` option so that each file starts on a new line. Note, however, that in GNU `cat` the `-n` flag numbers the output lines and does not control trailing newlines, so plain `cat` is the safer way to merge files unchanged.

To ease the transition, this change instructs users to call `ggml_backend_load()` or `ggml_backend_load_all()` to load a backend when they try to load a model while no backends are loaded.

That is a C error, likely from llama.cpp. Does the same model work with llama.cpp?
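The file-merging suggestion above comes down to joining several files into one without altering their contents. As a rough sketch, assuming the parts only need to be joined byte for byte in a known order, the same thing can be done in Python; the function name `concat_files` and the file names in the usage note are hypothetical.

```python
import shutil
from pathlib import Path


def concat_files(parts, dest):
    """Append each file in `parts` to `dest`, byte for byte, in the given order.

    Returns the size of the merged file in bytes.
    """
    dest = Path(dest)
    with dest.open("wb") as out:
        for part in parts:
            # Binary mode ensures no newline translation or other rewriting.
            with Path(part).open("rb") as src:
                shutil.copyfileobj(src, out)
    return dest.stat().st_size
```

For example, `concat_files(["model.part1", "model.part2"], "model.bin")` would write the two parts back to back. Comparing the returned size with the sum of the part sizes is a cheap sanity check that nothing was added or dropped.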
