Cognitively Inspired Cross Modal Data Generation Using Diffusion Models

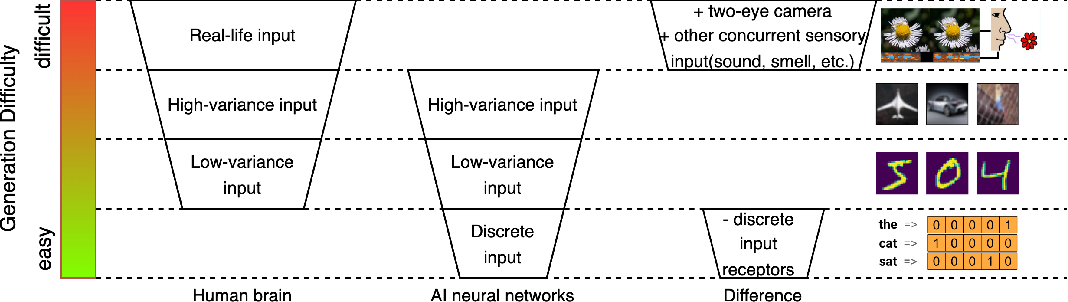

Most existing cross-modal generative methods based on diffusion models use guidance to provide control over the latent space to enable conditional generation across different modalities. Such methods focus on providing guidance through separately trained models, each for one modality.
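To make the idea of guidance from a separately trained model concrete, here is a minimal sketch of classifier-style guidance, in which the gradient of an external model's log-likelihood shifts the denoiser's noise prediction. This is the general technique, not code from the paper: the function names, the `scale` parameter, and the toy quadratic log-likelihood are all assumptions made for illustration.

```python
import numpy as np

def guided_eps(eps_pred, x_t, alpha_bar_t, grad_log_p, scale=1.0):
    """Shift a noise prediction with the gradient of an external model.

    eps_hat = eps - scale * sqrt(1 - alpha_bar_t) * grad_x log p(y | x_t)
    """
    return eps_pred - scale * np.sqrt(1.0 - alpha_bar_t) * grad_log_p(x_t)

def toy_grad_log_p(x_t, target=1.0):
    # Hypothetical stand-in for a separately trained guidance model:
    # log p(y | x) = -0.5 * ||x - target||^2, so the gradient is (target - x).
    return target - x_t

# Toy inputs: a zero sample and a zero noise prediction.
x_t = np.zeros((2, 3))
eps = np.zeros((2, 3))
eps_guided = guided_eps(eps, x_t, alpha_bar_t=0.75,
                        grad_log_p=toy_grad_log_p, scale=2.0)
```

In this setup each conditioning modality would need its own guidance model, which is the cost the abstract above points to.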

Inspired by how humans synchronously acquire multi-modal information and learn the correlation between modalities, we explore a multi-modal diffusion model training and sampling scheme that …

Single-cell multi-omics data have a high potential for deciphering complex cellular mechanisms, but simultaneously measuring multi-omics data from the same cells is still challenging, which calls for computational methods to integrate data of multiple modalities and generate unobserved data. In this paper, we present scDiffusion-X, a …

We use the diffusion model to perform data augmentation on the user-item interaction graph, measure user modality preferences by considering both the number of valid samples and data richness, and introduce a modality-aware GNN to aggregate multimodal interactions and capture deep user preferences.

Can we generalize diffusion models with the ability of multi-modal generative training for more generalizable modeling? In this paper, we propose a principled way to define a diffusion model by constructing a unified multi-modal diffusion model in a common diffusion space.

This work explores a multi-modal diffusion model training and sampling scheme that uses channel-wise image conditioning to learn cross-modality correlation during the training phase, to better mimic the learning process in the brain.

We propose a novel and general cross-modal contextualized diffusion model (ContextDiff) that harnesses cross-modal context to facilitate the learning capacity of cross-modal diffusion models, including text-to-image generation and text-guided video editing.

This paper presents a novel framework for collaborative generation across text, image, and audio modalities using an enhanced diffusion model architecture.
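The channel-wise image conditioning mentioned above can be pictured as stacking the modalities along the channel axis, so that a single diffusion process noises them together. The NumPy sketch below shows only that data-side step under stated assumptions: the linear beta schedule, the tensor shapes, and every function name are hypothetical, and the `clamp_condition` helper uses an inpainting-style replacement that is our assumption, not the sampling procedure of the paper.

```python
import numpy as np

def make_alpha_bar(num_steps=1000, beta_start=1e-4, beta_end=0.02):
    # Assumed linear beta schedule; returns the cumulative product of (1 - beta).
    betas = np.linspace(beta_start, beta_end, num_steps)
    return np.cumprod(1.0 - betas)

def stack_modalities(mod_a, mod_b):
    # (N, C_a, H, W) and (N, C_b, H, W) -> (N, C_a + C_b, H, W)
    return np.concatenate([mod_a, mod_b], axis=1)

def q_sample(x0, t, alpha_bar, rng):
    # Forward diffusion: x_t = sqrt(a_t) * x0 + sqrt(1 - a_t) * noise
    noise = rng.standard_normal(x0.shape)
    a = alpha_bar[t].reshape(-1, 1, 1, 1)
    return np.sqrt(a) * x0 + np.sqrt(1.0 - a) * noise, noise

def clamp_condition(x_t, cond0, t, alpha_bar, rng):
    # Assumed inpainting-style conditioning: overwrite the leading channels
    # with a noised copy of the known modality, leave the rest untouched.
    noised_cond, _ = q_sample(cond0, t, alpha_bar, rng)
    out = x_t.copy()
    out[:, :cond0.shape[1]] = noised_cond
    return out

rng = np.random.default_rng(0)
alpha_bar = make_alpha_bar()
mod_a = rng.standard_normal((4, 1, 8, 8))   # e.g. a single-channel modality
mod_b = rng.standard_normal((4, 3, 8, 8))   # e.g. a three-channel modality
x0 = stack_modalities(mod_a, mod_b)
t = rng.integers(0, len(alpha_bar), size=4)  # one timestep per sample
x_t, noise = q_sample(x0, t, alpha_bar, rng)
x_t_cond = clamp_condition(x_t, mod_a, t, alpha_bar, rng)
```

A denoising network trained on `x_t` would see both modalities in every step, which is one way a model could pick up cross-modality correlation during training rather than through a separate guidance model.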

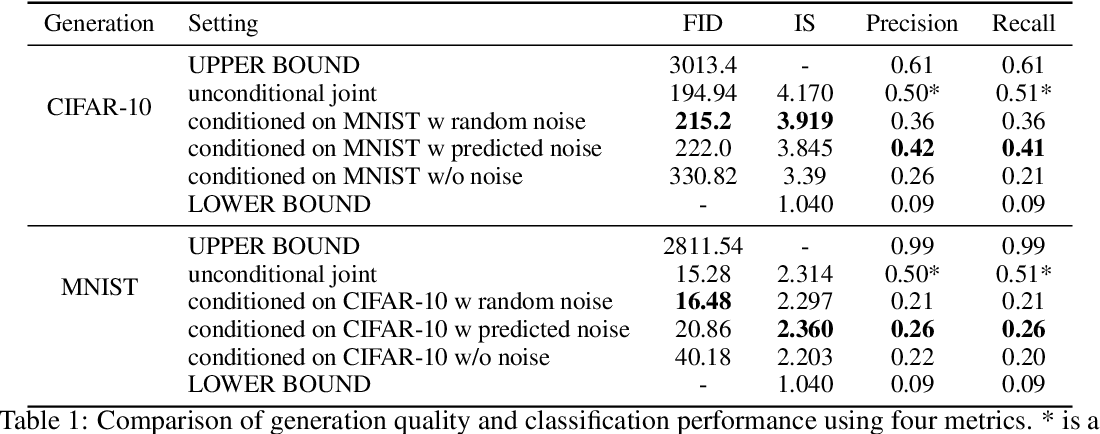

Table 1 from Cognitively Inspired Cross Modal Data Generation Using Diffusion Models

