Class-Aware Visual Prompt Tuning for Vision-Language Pre-Trained Model

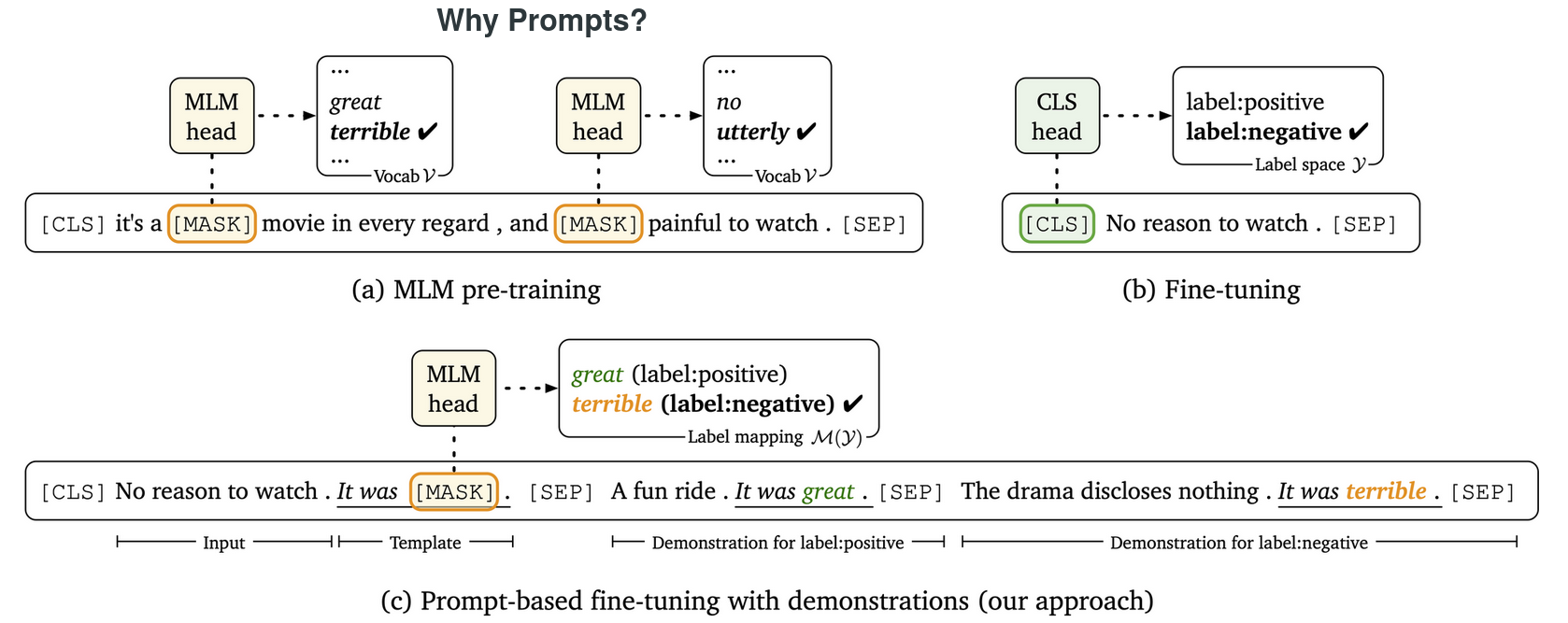

Our method provides a new paradigm for tuning large pre-trained vision-language models, and extensive experimental results on 8 datasets demonstrate the effectiveness of the proposed method. Our code is available in the supplementary materials. Separately, textual-based class-aware prompt tuning (TCP) has been presented as a method that explicitly incorporates prior knowledge about classes to enhance their discriminability.

In this article, we propose a novel dual-modality prompt tuning (DPT) paradigm that learns text and visual prompts simultaneously. To make the final image feature concentrate more on the target visual concept, a class-aware visual prompt tuning (CAVPT) scheme is further proposed within DPT.

Fig. 1. CuTCP overall framework. It combines custom prompts generated by large language models and learns class-aware prompt features through a knowledge embedding module.
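The idea of learning text and visual prompts at the same time can be illustrated with a toy sketch. This is not the DPT or CAVPT implementation: `mean_pool` is a hypothetical stand-in for the frozen CLIP encoders, all token vectors are random, and the names `classify`, `text_prompts`, and `visual_prompts` are assumptions made for illustration. It only shows where the two sets of learnable prompt tokens would enter the pipeline.

```python
import math
import random


def mean_pool(tokens):
    """Hypothetical stand-in for a frozen encoder: average the token vectors."""
    dim = len(tokens[0])
    return [sum(t[i] for t in tokens) / len(tokens) for i in range(dim)]


def cosine(a, b):
    """Cosine similarity between two vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def softmax(xs):
    """Numerically stable softmax."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def classify(patch_tokens, class_name_tokens, text_prompts, visual_prompts, scale=10.0):
    """Score one image against every class using prompts in both modalities."""
    # Visual branch: learnable prompt tokens are prepended to the image patch tokens.
    image_feature = mean_pool(visual_prompts + patch_tokens)
    logits = []
    for name_tokens in class_name_tokens:
        # Text branch: the shared learnable context is prepended to each class name.
        text_feature = mean_pool(text_prompts + name_tokens)
        logits.append(scale * cosine(image_feature, text_feature))
    return softmax(logits)


# Toy data: 8-dimensional tokens, 4 image patches, 3 classes, 2 prompts per modality.
rng = random.Random(0)
DIM = 8


def rand_tokens(n):
    return [[rng.gauss(0.0, 1.0) for _ in range(DIM)] for _ in range(n)]


patches = rand_tokens(4)
class_names = [rand_tokens(1) for _ in range(3)]
text_prompts = rand_tokens(2)    # would be trained; encoders stay frozen
visual_prompts = rand_tokens(2)  # would be trained; encoders stay frozen

probs = classify(patches, class_names, text_prompts, visual_prompts)
print(probs)
```

In an actual tuning setup, only `text_prompts` and `visual_prompts` would receive gradient updates while the encoder weights stay fixed. A class-aware scheme such as CAVPT would additionally condition the visual prompts on class information rather than keeping them the same for every input.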

Class-aware prompt tuning (CAPT) has also been proposed for federated long-tailed learning, leveraging a pre-trained vision-language model. To help the pre-trained CLIP model learn and represent more effective features, a dual-grained visual prompt scheme is designed to learn global discrepancies as well as to specify the subtle discriminative details among visual classes, while the random vectors paired with class names in the class-aware text prompt are transformed into class-specific discrepancy.
