Cannot Train on Multiple GPUs: Issue 6, AlbinZhu Yolov7 Polygon

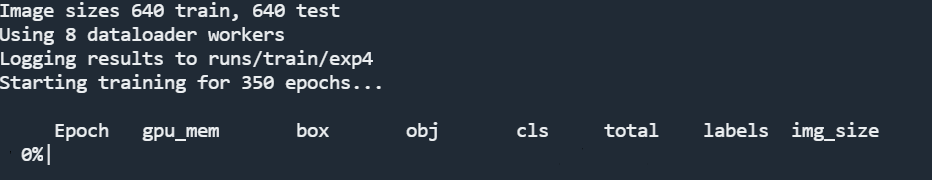

Unfortunately, I haven't solved this problem either. I recommend using YOLOv8 pose as an alternative. I haven't done much testing, so I can't answer this question. In theory only the backbone was changed, so some operators may be responsible.

I am using YOLOv7 to run a training session for custom object detection. My environment is: OS Ubuntu 22.04, Python 3.10, torch version '2.1.0+cu121', running on an AWS EC2 g5.2xlarge instance.
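When reporting an environment like the one described here, it can help to collect the details programmatically so that the torch build and the number of visible GPUs are stated exactly. The following is a minimal sketch, not taken from the original thread; the function name `describe_environment` is hypothetical, and it assumes PyTorch may or may not be installed.

```python
import platform


def describe_environment():
    """Collect OS, Python, and (if installed) PyTorch/CUDA details.

    `describe_environment` is a hypothetical helper for illustration.
    """
    info = {
        "os": platform.platform(),
        "python": platform.python_version(),
        "torch": None,          # stays None if PyTorch is not importable
        "cuda_build": None,     # CUDA version PyTorch was built against
        "cuda_available": False,
        "gpu_count": 0,
    }
    try:
        import torch
    except (ImportError, OSError):
        # PyTorch is missing or its shared libraries failed to load.
        return info

    info["torch"] = str(torch.__version__)
    info["cuda_build"] = torch.version.cuda
    info["cuda_available"] = torch.cuda.is_available()
    info["gpu_count"] = torch.cuda.device_count()
    return info


if __name__ == "__main__":
    for key, value in describe_environment().items():
        print(f"{key}: {value}")
```

If `gpu_count` is lower than the number of GPUs a multi-GPU launch expects, the launch configuration is worth checking before anything else.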

For anyone looking at this in the future: I solved my issue by making sure CUDA is on the PATH.

We are encountering a problem during training that I believe is related to the dataloader. During training, GPU utilization sometimes drops to 0% and at other times rises to 50%-70%, resulting in very long iteration times.

When I attempt to run multi-GPU training, the model appears to load onto the GPUs (I can tell from `nvidia-smi`), but the run hangs before training actually starts. I have confirmed that the same command works for multi-GPU training on a 4x V100 system, so I believe the command is correct.

Test a basic training run on a single GPU (which you have already done successfully). Then re-run the multi-GPU command with a reduced batch size or a simpler configuration to see whether the issue is related to a hardware limitation.
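One of the replies reports that the fix was making sure CUDA is on the PATH. The thread does not say how this was verified, so the following is only a sketch of one way to check it: it looks up `nvcc` and `nvidia-smi` on the search path and lists PATH entries that mention "cuda". The helper name `cuda_path_report` is hypothetical.

```python
import os
import shutil


def cuda_path_report(path=None):
    """Report whether common CUDA tools resolve on the given search path.

    `path` defaults to the current PATH environment variable.
    `cuda_path_report` is a hypothetical helper for illustration.
    """
    search_path = os.environ.get("PATH", "") if path is None else path

    # shutil.which returns the full path of the executable, or None.
    tools = {
        name: shutil.which(name, path=search_path)
        for name in ("nvcc", "nvidia-smi")
    }

    # PATH entries whose name suggests a CUDA installation directory.
    cuda_dirs = [
        entry for entry in search_path.split(os.pathsep)
        if "cuda" in entry.lower()
    ]
    return {"tools": tools, "cuda_dirs": cuda_dirs}


if __name__ == "__main__":
    report = cuda_path_report()
    for name, location in report["tools"].items():
        print(f"{name}: {location or 'not found on PATH'}")
    print("CUDA-like PATH entries:", report["cuda_dirs"])
```

A missing `nvcc` does not by itself prove the training hang is caused by PATH, since PyTorch wheels bundle their own CUDA runtime, but it is a quick first check.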

It would mean a lot to me if someone could answer my questions. I do not currently have two GPUs, so I can't figure this out by trial and error. If anyone has been able to train a model with more than one GPU, please shed some light. Thank you.

I'm encountering an issue when running distributed training with the Hugging Face accelerate library. The training process freezes after dataloader initialization when using multiple GPUs, but works fine on a single GPU.

To address this issue, you can try training with synchronized batch normalization (sync BN), or set `NCCL_DEBUG=VERSION`. Note that `NCCL_DEBUG` controls NCCL's log output rather than disabling a timeout, so a more verbose value such as `NCCL_DEBUG=INFO` is more useful for seeing where the hang occurs. You can also try specifying the local rank during validation, which should help ensure that data parallelism is properly established across the two GPUs.

When training multiple YOLO models on different GPUs on AWS or other remote servers, running inside tmux lets training continue even if the SSH connection is lost.
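The tmux and `NCCL_DEBUG` suggestions can be combined by starting each training run in a detached tmux session with the environment variables set on the command line. The sketch below only builds and prints the command. The session name and the `python train.py` command are placeholders, since the thread does not give the actual training command, and `build_tmux_command` is a hypothetical helper.

```python
import shlex
import subprocess


def build_tmux_command(session, train_cmd, env=None):
    """Build a `tmux new-session -d` command that runs `train_cmd`.

    `env` is a dict of environment variables (for example NCCL_DEBUG)
    that are prefixed to the shell command run inside the session.
    """
    env = env or {}
    assignments = " ".join(
        f"{key}={shlex.quote(value)}" for key, value in env.items()
    )
    shell_line = f"{assignments} {shlex.join(train_cmd)}".strip()
    # -d starts the session detached; -s names it for later `tmux attach`.
    return ["tmux", "new-session", "-d", "-s", session, shell_line]


def launch(command):
    """Run the tmux command; requires tmux to be installed."""
    subprocess.run(command, check=True)


if __name__ == "__main__":
    # Placeholder training command and session name (assumptions).
    command = build_tmux_command(
        "yolo-gpu0",
        ["python", "train.py"],
        {"CUDA_VISIBLE_DEVICES": "0", "NCCL_DEBUG": "INFO"},
    )
    print(shlex.join(command))
    # launch(command)  # uncomment on a machine with tmux installed
```

Because the session is detached, it keeps running after the SSH connection drops, and `tmux attach -t yolo-gpu0` reconnects to its output later.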

