Cannot Load Local Model Directly Into Docker (Issue 1113)

My flan-t5-large model was fine-tuned locally and is stored locally, so I would expect to be able to simply load it from the local directory. Please help.

First, when I try to send a query to local.ai's chat UI page, none of my video cards are tasked. After reviewing the logs, it appears that the models are failing to load.
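For the "load it locally" case, a common first check is whether the directory visible inside the container actually contains the saved model files. The sketch below is only an illustration: the helper names are hypothetical, and it assumes the model was saved with `save_pretrained`, so a `config.json` sits in the directory, and that the `transformers` package is installed in the image. Passing `local_files_only=True` asks `from_pretrained` not to contact the Hub.

```python
from pathlib import Path

# Files we expect in a directory written by save_pretrained (assumption:
# config.json is the minimum needed to recognise the model type).
REQUIRED_FILES = ("config.json",)


def missing_model_files(model_dir: str) -> list[str]:
    """Return the required entries that are absent from model_dir."""
    root = Path(model_dir)
    if not root.is_dir():
        # The directory itself is missing, e.g. the volume was not mounted.
        return [str(root)]
    return [name for name in REQUIRED_FILES if not (root / name).is_file()]


def load_local_seq2seq(model_dir: str):
    """Load a seq2seq model (such as a fine-tuned FLAN-T5) strictly from disk."""
    missing = missing_model_files(model_dir)
    if missing:
        raise FileNotFoundError(f"Model directory incomplete, missing: {missing}")
    # Imported lazily so the path check works without transformers installed.
    from transformers import AutoModelForSeq2SeqLM, AutoTokenizer

    tokenizer = AutoTokenizer.from_pretrained(model_dir, local_files_only=True)
    model = AutoModelForSeq2SeqLM.from_pretrained(model_dir, local_files_only=True)
    return tokenizer, model
```

If `missing_model_files` reports the directory itself, the problem is usually the mount or the path inside the container rather than the model.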

Use a custom entrypoint script to download the model when a container is launched. The model will be persisted in the volume mount, so subsequent starts will be quick.

In this guide, we'll cover everything you need to know to use Docker Model Runner in real-world development workflows, including how to run models locally, configure Docker Desktop, connect from Node.js apps, use Docker Compose for orchestration, and follow best practices.

Hello! I'm working on a project that requires me to deploy a locally stored model on an air-gapped server. I'm using the TGI Docker container (with Podman). I'm used to Ollama, so I initially attempted to load the local mode….

I'm encountering issues while trying to set up the Local AI CPU version in a Docker container on my Ubuntu Server 24.04. I've been following the official documentation and apfelcast's guide, but I'm unable to get the chat feature to work.
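The entrypoint approach mentioned above (download on first launch, reuse the volume afterwards) could be sketched roughly as follows. Everything named here is an assumption for illustration: the `MODEL_ID` and `MODEL_DIR` environment variables, the `/models` default, and the marker file are hypothetical, and the default downloader assumes the `huggingface_hub` package is installed. On an air-gapped server the download step would not work; the model would have to be copied into the volume beforehand.

```python
import os
from pathlib import Path
from typing import Callable

# Hypothetical marker written after a successful download, so that a
# half-finished download is not mistaken for a complete model.
MARKER = ".download_complete"


def default_download(model_id: str, target: Path) -> None:
    """Fetch a model repository into target (assumes huggingface_hub is installed)."""
    from huggingface_hub import snapshot_download

    snapshot_download(repo_id=model_id, local_dir=str(target))


def ensure_model(
    model_id: str,
    volume_dir: str,
    download: Callable[[str, Path], None] = default_download,
) -> bool:
    """Download the model into the mounted volume unless it is already there.

    Returns True if a download happened, False if the cached copy was reused.
    """
    target = Path(volume_dir)
    marker = target / MARKER
    if marker.exists():
        return False
    target.mkdir(parents=True, exist_ok=True)
    download(model_id, target)
    marker.touch()
    return True


def main(argv: list[str]) -> None:
    """Entrypoint body: prepare the model, then hand over to the real command."""
    ensure_model(os.environ["MODEL_ID"], os.environ.get("MODEL_DIR", "/models"))
    if argv:
        # Replace this process with the server command given to the container.
        os.execvp(argv[0], argv)

# In a real entrypoint script, finish with: main(sys.argv[1:])
```

Because the marker lives inside the volume, the second and later container starts skip the download, which is what makes restarts fast.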

So I figured I could ask here, since it is Transformers/RoBERTa related. I am so puzzled as to why this stops working in Docker and works locally; usually it's the other way around! Haha. What other information can I provide to help debug this? I'm more than happy to send it over promptly.

Learn how to fix Docker image import errors quickly. Troubleshoot local image import issues with our step-by-step guide and solutions.

Obviously, the model folder is right there. Can anyone tell me how to mount local model files into the vLLM Docker image?

Your current environment: vLLM OpenAI Docker image, version v1.10.1. Describe the bug: loading a model from local S3 causes an error on version v1.10.1.
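On the question of mounting local model files into the vLLM image, one usual pattern is a read-only bind mount plus a `--model` argument pointing at the path inside the container. The helper below only builds the command line and is a sketch under assumptions: the function name, the `/models` container path, and the `vllm/vllm-openai:latest` tag are illustrative choices, and GPU flags are left out because they differ between Docker and Podman.

```python
from pathlib import Path


def vllm_run_command(
    host_model_dir: str,
    image: str = "vllm/vllm-openai:latest",  # assumed tag, pin a real one in practice
    container_dir: str = "/models",          # hypothetical mount point
    port: int = 8000,
    runtime: str = "docker",                 # "podman" accepts the same basic flags
) -> list[str]:
    """Build an argv list that serves a local model directory with vLLM."""
    host = Path(host_model_dir).resolve()
    # Path of the model as seen from inside the container.
    target = f"{container_dir}/{host.name}"
    return [
        runtime, "run", "--rm",
        "-p", f"{port}:8000",
        # Bind-mount the host directory read-only into the container.
        "-v", f"{host}:{target}:ro",
        image,
        # Point the server at the in-container path, not the host path.
        "--model", target,
    ]
```

The resulting list can be passed to `subprocess.run`. A frequent cause of "the folder is right there" failures is passing the host path to `--model` instead of the path inside the container.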


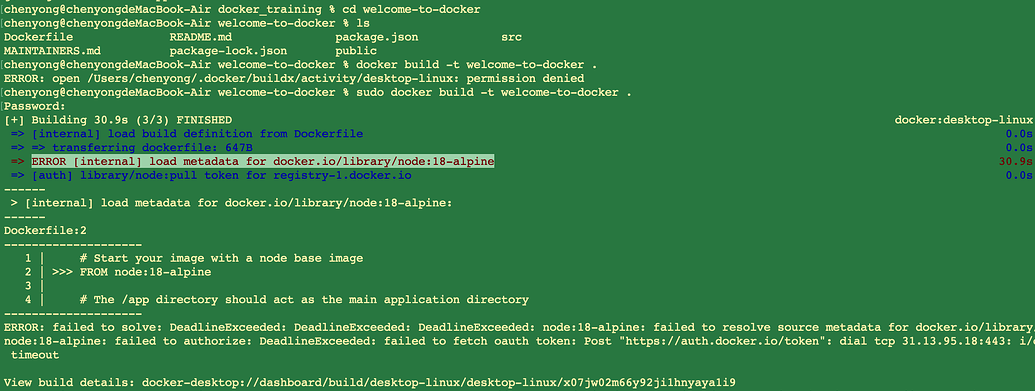
