Can I Use Hugging Face Models as Container Images?

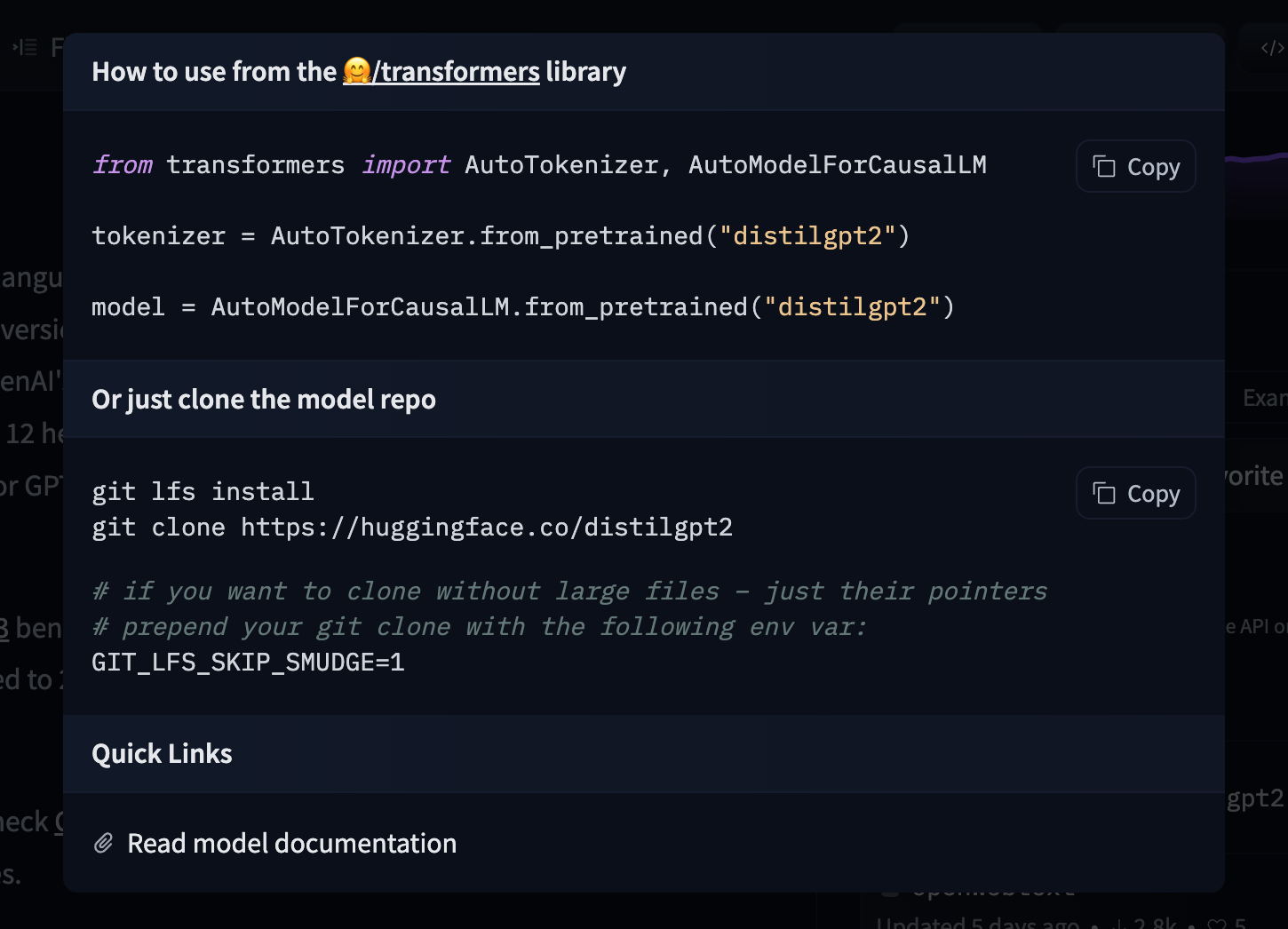

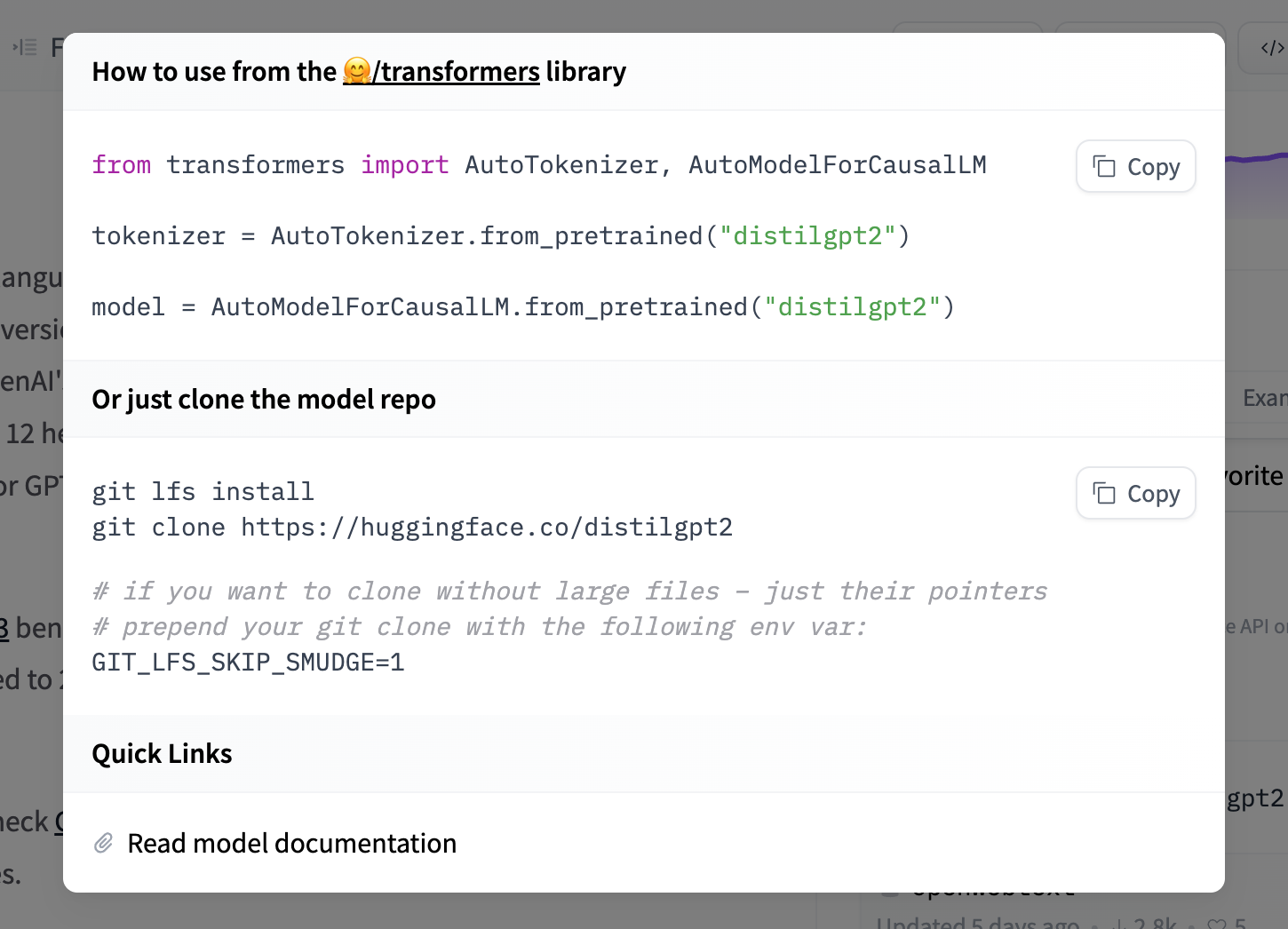

Models

Hugging Face Inference Endpoints not only lets you customize your inference handler but also lets you provide a custom container image. These can be public images such as tensorflow/serving:2.7.3 or private images hosted on Docker Hub, AWS ECR, Azure ACR, or Google GCR. In this tutorial, you will learn how to use Docker to create a container with all the code and artifacts needed to load Hugging Face models and expose them as web service endpoints using Flask.

Downloading Models

Discover whether you can easily package any Hugging Face model as a container image, and learn about the recommended onboarding process and how it applies to custom models. In this article, we'll look at how to use the Llama model hosted on Hugging Face in a Docker context, opening up new opportunities for natural language processing (NLP) enthusiasts and researchers. This repository contains a set of container images for training and serving Hugging Face models across different versions and libraries. The containers are structured and scoped according to a set of design principles.

First, download the model weights locally before building the image. Then update your Dockerfile to use the COPY instruction to copy these weights into the container. Finally, adjust your code to load the model weights from the container path.
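The three steps above (download, copy, load from the container path) might look roughly like this in Python. This is a sketch, not the article's own code: it assumes `huggingface_hub` and `transformers` are installed, that the checkpoint is a `transformers`-style model with a `config.json`, and that the Dockerfile copies the weights to a hypothetical `/opt/model` (the `MODEL_DIR` override is likewise an illustrative assumption).

```python
import os
from pathlib import Path

# Hypothetical path the Dockerfile copies the weights to, for example with
#   COPY ./model /opt/model
CONTAINER_MODEL_DIR = "/opt/model"


def download_weights(repo_id: str, target_dir: str) -> str:
    """Step 1, on the build machine: fetch a model snapshot into target_dir."""
    # Imported lazily because it needs network access and huggingface_hub.
    from huggingface_hub import snapshot_download

    return snapshot_download(repo_id=repo_id, local_dir=target_dir)


def resolve_model_dir(default: str = CONTAINER_MODEL_DIR) -> Path:
    """Step 3, inside the container: locate the copied weights and sanity-check them."""
    model_dir = Path(os.environ.get("MODEL_DIR", default))
    # Assumption: a transformers-style checkpoint always ships a config.json.
    if not (model_dir / "config.json").is_file():
        raise FileNotFoundError(f"no config.json under {model_dir}; were the weights copied?")
    return model_dir


def load_model(model_dir: Path):
    """Load tokenizer and model strictly from disk, never from the Hub."""
    from transformers import AutoModel, AutoTokenizer

    # local_files_only=True makes loading fail fast instead of trying to download.
    tokenizer = AutoTokenizer.from_pretrained(model_dir, local_files_only=True)
    model = AutoModel.from_pretrained(model_dir, local_files_only=True)
    return tokenizer, model
```

In this arrangement you would call `download_weights("<repo-id>", "./model")` before `docker build`, and the application would call `load_model(resolve_model_dir())` at startup. Gated models such as Llama additionally require an access token on the build machine.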

By default, Hugging Face libraries store downloaded files in a shared cache in the user's home directory (~/.cache/huggingface). This is useful in most cases, but not when building a Docker image, because the cache must be downloaded every time. How can you place the cache files in the Docker app's folder and build the image properly?

If the available inference engines don't meet your requirements, you can deploy your own custom solution as a Docker container and run it on Inference Endpoints. In this guide, I'll walk you through how I built a simple conversational AI model on my Mac, containerized it with Docker, and integrated it with the Hugging Face Hub, so that Hugging Face models can be deployed with Docker in just a few steps.
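One common answer to the cache question above is to point the `HF_HOME` environment variable at a folder inside the app directory. The sketch below does that from Python; the `.hf-cache` folder name and the `use_app_cache` helper are hypothetical. Because `huggingface_hub` and `transformers` read `HF_HOME` when they are first imported, the helper must run before those imports, and it warns if they are already loaded.

```python
import os
import sys
import warnings
from pathlib import Path


def use_app_cache(app_dir: str) -> Path:
    """Point the Hugging Face cache at a (hypothetical) .hf-cache folder inside app_dir."""
    # HF_HOME is read at import time, so setting it afterwards has no effect.
    if "huggingface_hub" in sys.modules or "transformers" in sys.modules:
        warnings.warn("set HF_HOME before importing huggingface_hub or transformers")
    cache_dir = Path(app_dir).resolve() / ".hf-cache"
    cache_dir.mkdir(parents=True, exist_ok=True)
    os.environ["HF_HOME"] = str(cache_dir)
    return cache_dir
```

In a Dockerfile, the equivalent is an `ENV HF_HOME=/app/.hf-cache` line followed by a `RUN` step that downloads the model during the build, so the files end up in an image layer instead of being fetched at every container start. Passing an explicit `cache_dir` to `from_pretrained` is an alternative when only one call site needs it.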
