Building LLM-Powered Applications (Gradient Flow)

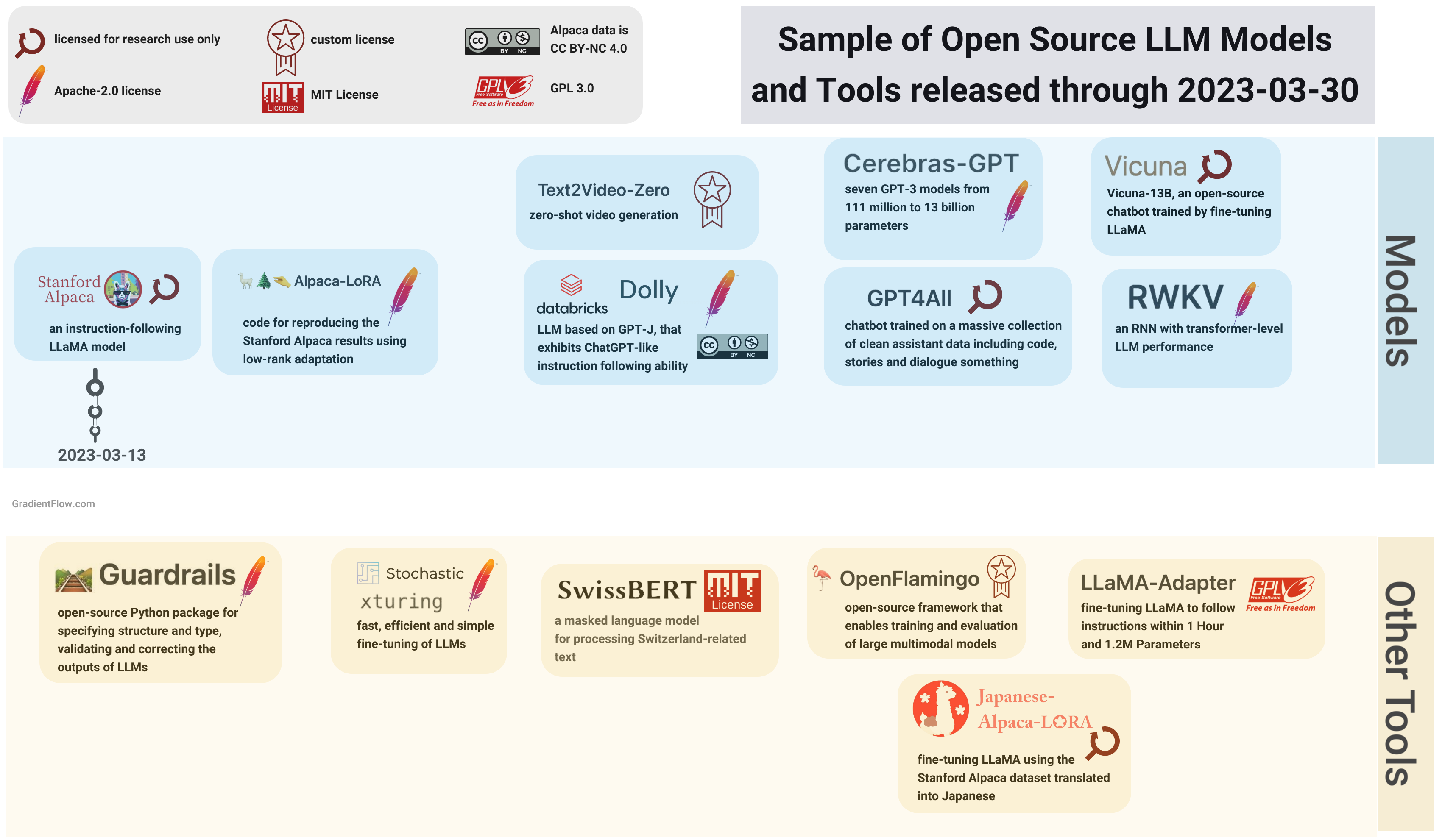

The proliferation of tools and resources for building LLM-powered applications has opened a new world of possibilities for developers. These tools let developers leverage the power of AI without having to learn the complexities of machine learning. Even so, building an LLM-powered application can be challenging, especially if you're new to the field. The process involves several steps, from coming up with a feasible idea to deploying the application.

In this chapter, we explored the field of large language models, with a technical deep dive into their architecture, functioning, and training process. This review takes the perspective of a generative AI (GenAI) architect tasked with designing, building, and deploying LLM-powered applications from the ground up. Waleed Kadous, chief scientist at Anyscale, shares his top strategies for building LLM-backed applications. He covers deploying open-source models like Llama and Mistral, fine-tuning techniques, grounding generation, retrieval-augmented generation (RAG), hardware options, evaluation methods, and more. With a hands-on approach, we provide readers with a step-by-step guide to implementing LLM-powered apps for specific tasks using powerful frameworks like LangChain.
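Of the topics listed above, grounding generation through retrieval (RAG) is the easiest to illustrate in a few lines. The following is a minimal sketch, not the method described by any of the sources: it assumes a toy bag-of-words cosine similarity in place of a real embedding model, and the names `retrieve`, `build_grounded_prompt`, and `DOCS` are hypothetical. It stops at assembling the prompt; sending that prompt to a model is left out.

```python
import math
import re
from collections import Counter


def tokenize(text):
    """Lowercase the text and split it into alphanumeric tokens."""
    return re.findall(r"[a-z0-9]+", text.lower())


def cosine(a, b):
    """Cosine similarity between two token-count vectors (Counters)."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def retrieve(query, documents, k=2):
    """Return up to k documents most similar to the query (toy retriever)."""
    q = Counter(tokenize(query))
    scored = [(cosine(q, Counter(tokenize(d))), d) for d in documents]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    # Drop documents that share no tokens with the query.
    return [doc for score, doc in scored[:k] if score > 0]


def build_grounded_prompt(query, documents, k=2):
    """Assemble a prompt that asks the model to answer from retrieved context."""
    context = retrieve(query, documents, k)
    lines = ["Answer using only the context below.", "", "Context:"]
    lines += [f"- {c}" for c in context]
    lines += ["", f"Question: {query}"]
    return "\n".join(lines)


# Hypothetical mini-corpus, used only for illustration.
DOCS = [
    "Llama and Mistral are open source language models.",
    "Fine tuning adapts a pretrained model to a specific task.",
    "GPUs are a common hardware option for serving models.",
]

if __name__ == "__main__":
    print(build_grounded_prompt("Which open source models exist?", DOCS, k=1))
```

In a real application the bag-of-words scorer would typically be replaced by an embedding model and a vector store, which frameworks such as LangChain wrap behind their own retriever abstractions.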

When you train an LLM, you're building the scaffolding and neural networks to enable deep learning. The blog posts accompanying the release of recent open LLMs highlight a strong focus on scalability, adaptability, and ease of integration, which are crucial factors for teams building LLM-powered applications. This is an invaluable guide for anyone looking to take their LLM usage to the next level. Waleed draws on extensive real-world experience to offer proven tips that can be applied right away. Moving ahead, with a focus on LangChain, a lightweight, Python-based framework, we guide you through the process of creating intelligent agents capable of retrieving information from unstructured data and engaging with structured data using LLMs and powerful toolkits.

