Backpropagation Explained: How Neural Nets Learn

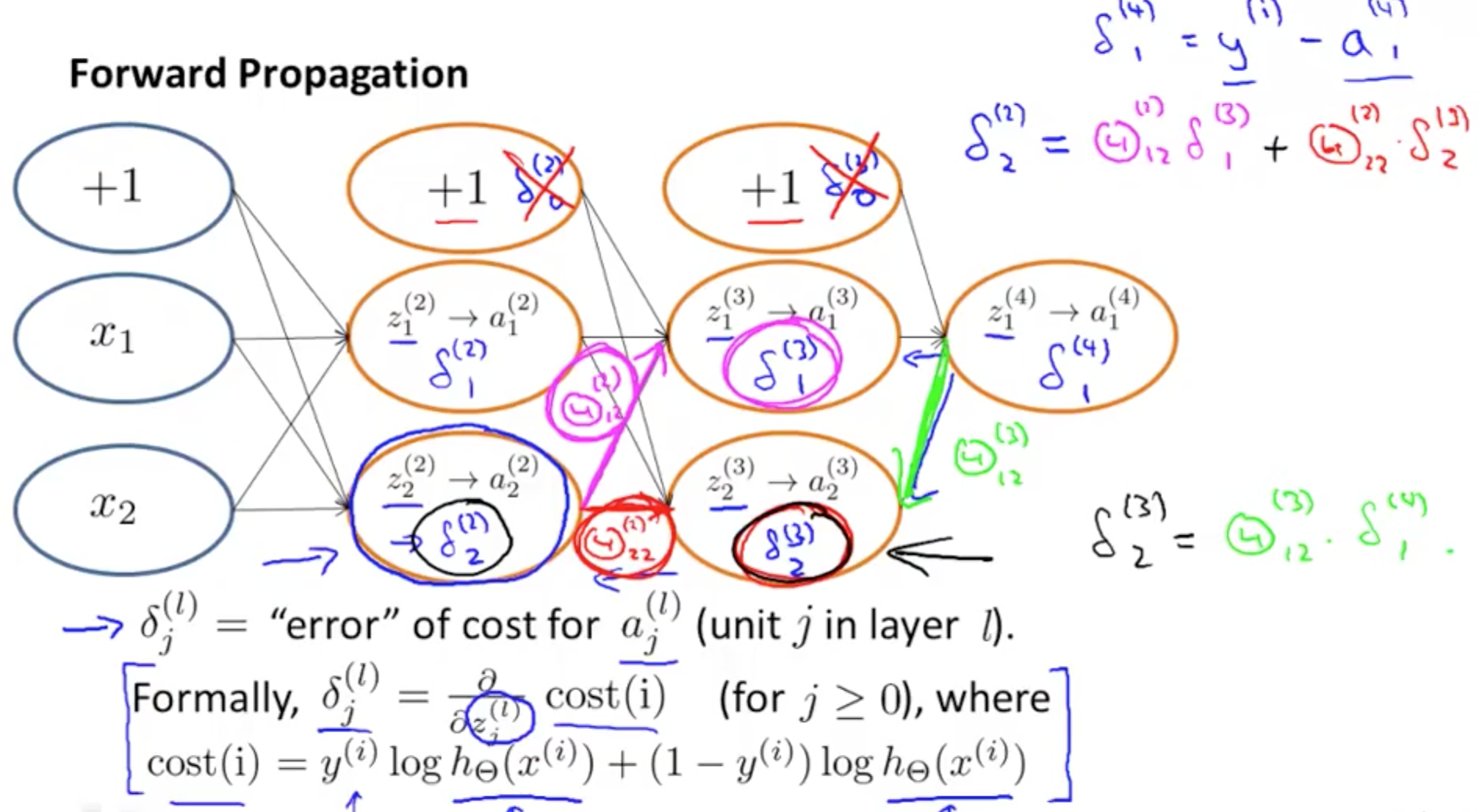

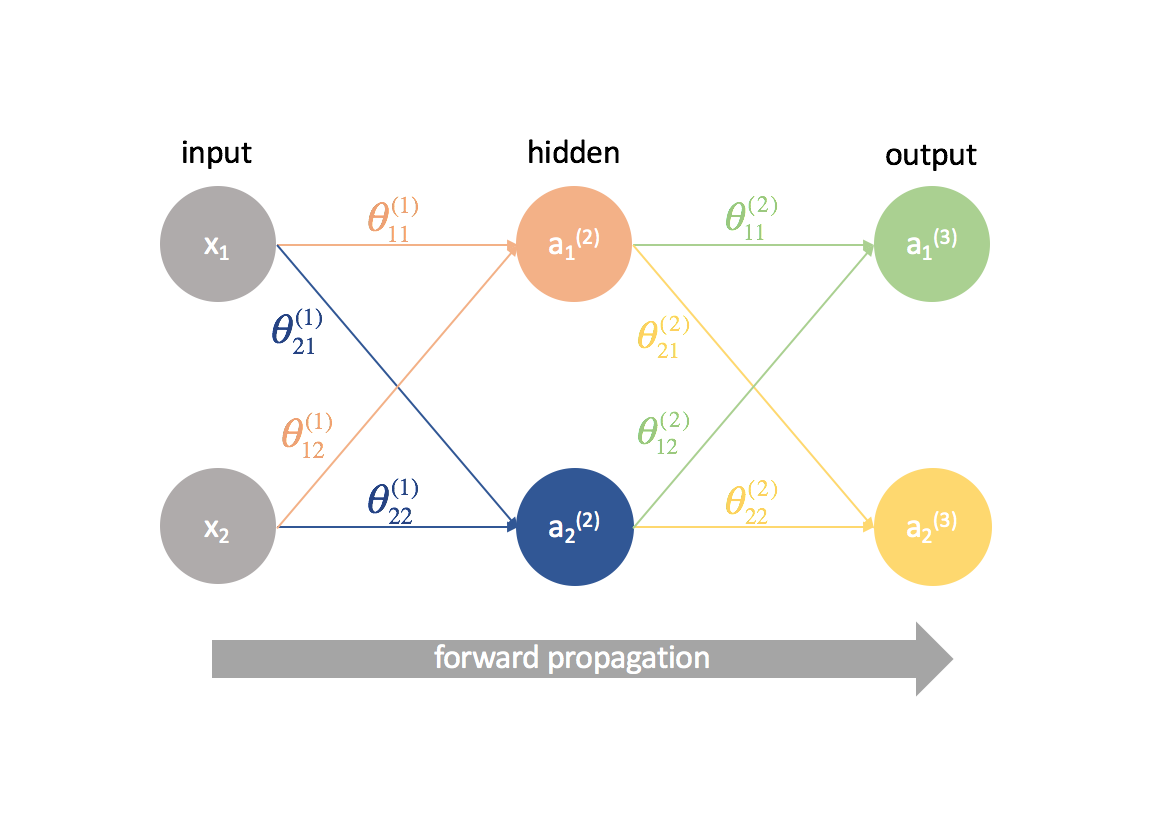

How Do Neural Networks Learn?

In machine learning, backpropagation is a gradient computation method commonly used when training a neural network to compute parameter updates. It is an efficient application of the chain rule to neural networks. The backpropagation algorithm involves two main steps: the forward pass and the backward pass.

1. Forward pass. In the forward pass, the input data is fed into the input layer. These inputs, combined with their respective weights, are passed to the hidden layers.
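
The forward pass described above can be sketched in a few lines of plain Python. This is a minimal illustration, not a reference implementation: the single hidden layer, the sigmoid activation, and all names and numbers (`forward`, `W1`, `b1`, `W2`, `b2`) are assumptions chosen for the example.

```python
import math

def sigmoid(x):
    """Logistic activation (an assumed choice; any differentiable activation works)."""
    return 1.0 / (1.0 + math.exp(-x))

def forward(x, W1, b1, W2, b2):
    """Feed inputs through one hidden layer and a single output unit."""
    # Each hidden unit combines the inputs with its own weights, adds a bias,
    # and applies the activation.
    hidden = [
        sigmoid(sum(w * xi for w, xi in zip(row, x)) + b)
        for row, b in zip(W1, b1)
    ]
    # The output unit does the same with the hidden activations.
    output = sigmoid(sum(w * h for w, h in zip(W2, hidden)) + b2)
    return hidden, output

# Hypothetical inputs and weights, for illustration only.
x = [1.0, 0.0]
W1 = [[0.5, -0.5], [0.25, 0.75]]  # one row of weights per hidden unit
b1 = [0.0, 0.0]
W2 = [1.0, -1.0]
b2 = 0.0

hidden, output = forward(x, W1, b1, W2, b2)
```

The hidden activations are returned alongside the output because the backward pass needs them to compute gradients.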

What Is Backpropagation in a Neural Network?

This is the whole trick of backpropagation: rather than computing each layer's gradients independently, observe that they share many of the same terms, so we might as well calculate each shared term once and reuse it. This strategy, in general, is called dynamic programming.

Backpropagation is a machine learning technique essential to the optimization of artificial neural networks. It facilitates the use of gradient descent algorithms to update network weights, which is how the deep learning models driving modern artificial intelligence (AI) "learn."

In this post, we discuss how backpropagation works and explain it in detail for three simple examples. The first two examples contain all the calculations; for the last one, we only illustrate the equations that need to be calculated.

Here we tackle backpropagation, the core algorithm behind how neural networks learn. If you followed the last two lessons, or if you're jumping in with the appropriate background, you know what a neural network is and how it feeds information forward.
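
To make the reuse of shared terms concrete, here is a small sketch on a deliberately simplified network: a chain of scalar layers with one weight each, a tanh activation, and a squared-error loss. These choices, and the names `loss_and_grads` and `upstream`, are assumptions made for illustration. The variable `upstream` carries the shared term from layer to layer, so each layer's gradient costs only a couple of multiplications.

```python
import math

def loss_and_grads(x, y, weights):
    """Scalar chain a_l = tanh(w_l * a_{l-1}) with loss 0.5 * (a_L - y)**2."""
    # Forward pass: store every activation for reuse in the backward pass.
    activations = [x]
    for w in weights:
        activations.append(math.tanh(w * activations[-1]))
    loss = 0.5 * (activations[-1] - y) ** 2

    # Backward pass: walk the layers in reverse, reusing the running product.
    grads = [0.0] * len(weights)
    upstream = activations[-1] - y  # d(loss)/d(a_L)
    for l in reversed(range(len(weights))):
        a_in, a_out = activations[l], activations[l + 1]
        dz = upstream * (1.0 - a_out ** 2)  # chain through tanh: d(loss)/d(z_l)
        grads[l] = dz * a_in                # d(loss)/d(w_l)
        upstream = dz * weights[l]          # shared term handed to the layer below
    return loss, grads

# Hypothetical values, for illustration only.
weights = [0.8, -0.5, 1.2]
loss, grads = loss_and_grads(0.7, 0.3, weights)
```

Computing each layer's gradient from scratch would repeat the products for all later layers every time. Carrying `upstream` backward computes each of those products exactly once.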

Neural Networks Learning Machine Learning Deep Learning And

Backpropagation, short for "backward propagation of errors," is an algorithm for supervised learning of artificial neural networks using gradient descent. Given an artificial neural network and an error function, the method calculates the gradient of the error function with respect to the neural network's weights.

Backpropagation identifies which pathways are more influential in the final answer and allows us to strengthen or weaken connections to arrive at a desired prediction. It is such a fundamental component of deep learning that it will invariably be implemented for you in the package of your choosing.

In this chapter I'll explain a fast algorithm for computing such gradients, an algorithm known as backpropagation. The backpropagation algorithm was originally introduced in the 1970s, but its importance wasn't fully appreciated until a famous 1986 paper by David Rumelhart, Geoffrey Hinton, and Ronald Williams.

Learn about backpropagation, its mechanics, coding in Python, types, limitations, and alternative approaches.
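
As a rough sketch of how the gradient of the error function feeds gradient descent, the example below fits a single weight `w` so that `w * x` matches the targets. The one-weight model, the toy data, the learning rate, and the function names are all assumptions for illustration. In a real network, backpropagation would supply the gradient for every weight in the same way.

```python
def mean_squared_error_grad(w, data):
    """Gradient of the mean of 0.5 * (w * x - y)**2 with respect to w."""
    # Chain rule per example: d/dw [0.5 * (w*x - y)**2] = (w*x - y) * x
    return sum((w * x - y) * x for x, y in data) / len(data)

def gradient_descent(w, data, learning_rate, steps):
    """Repeatedly move w against the gradient of the error."""
    for _ in range(steps):
        w = w - learning_rate * mean_squared_error_grad(w, data)
    return w

# Hypothetical toy data generated by y = 2 * x.
data = [(1.0, 2.0), (2.0, 4.0), (3.0, 6.0)]
w_fit = gradient_descent(w=0.0, data=data, learning_rate=0.05, steps=50)
```

With this data the fitted weight moves toward 2, the value that makes the error zero. Too large a learning rate would make the updates overshoot instead of converge.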

