Autoencoders in Deep Learning: Tutorial and Use Cases (2023)

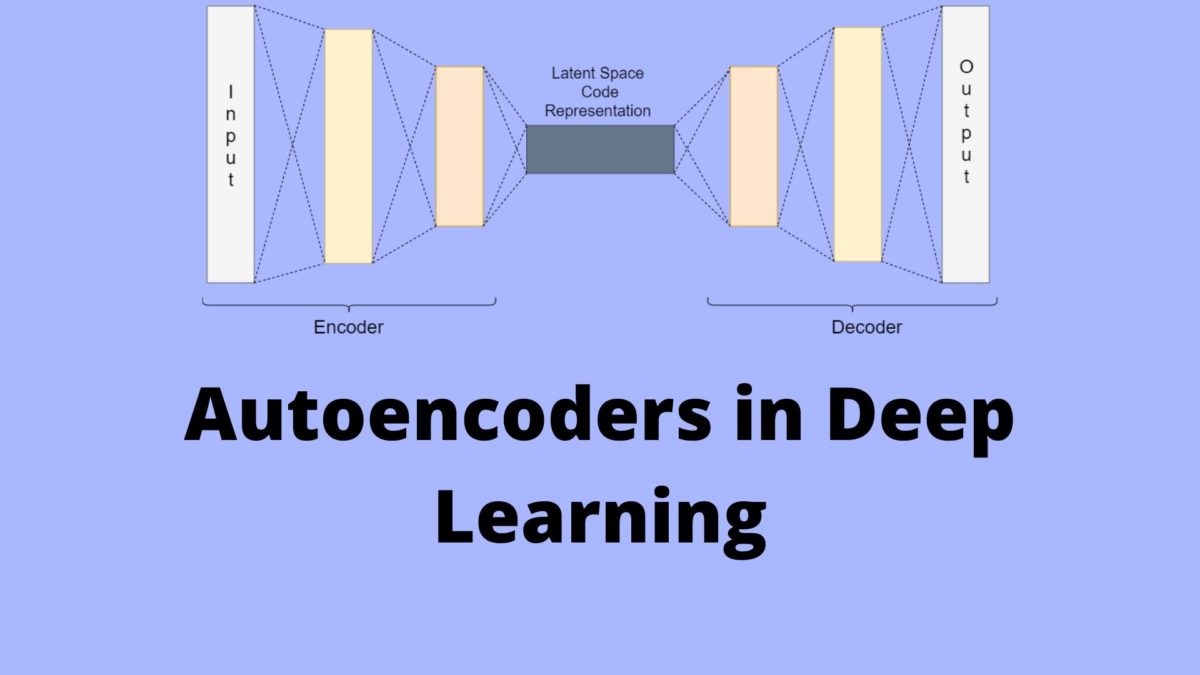

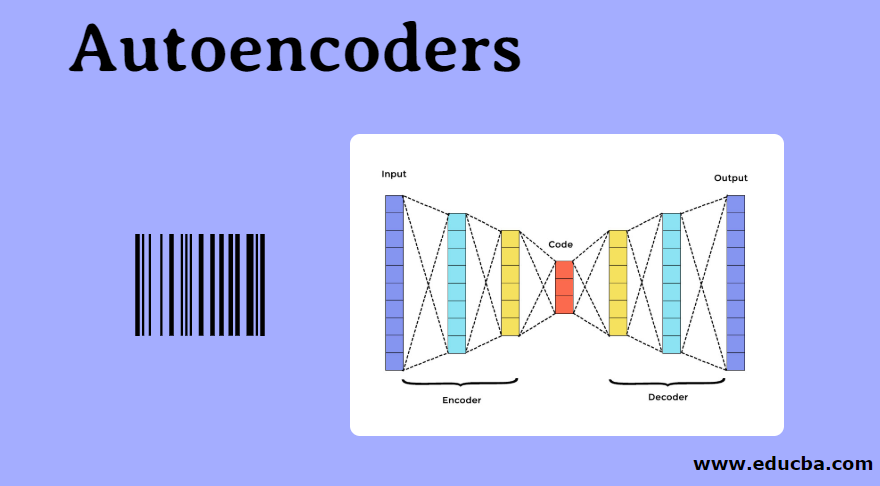

Autoencoders don't match a sample to a label. Rather, they encode the input distribution into common patterns (representations) shared across all samples, then decode those representations back into input space.

Both PCA and autoencoders can perform dimensionality reduction, so what is the difference between them? In what situations should I use one over the other?
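One way to make the PCA comparison concrete is to treat both methods as an encode/decode pair and compare reconstruction error on the same data. The sketch below is only an illustration under stated assumptions: the toy data, the function names `pca_reconstruct` and `train_linear_autoencoder`, and the hyperparameters are all made up for this example, and the autoencoder is deliberately linear, which is the case where it is expected to learn the same subspace as PCA. A nonlinear autoencoder would add activation functions between the layers.

```python
import numpy as np

rng = np.random.default_rng(0)

# Toy data (assumption): 200 samples in 5-D that lie on a 2-D linear subspace.
latent = rng.normal(size=(200, 2))
mixing = rng.normal(size=(2, 5))
X = latent @ mixing
X = X - X.mean(axis=0)  # center, as PCA expects


def pca_reconstruct(X, k):
    """Encode with the top-k principal directions, then decode back."""
    _, _, vt = np.linalg.svd(X, full_matrices=False)
    components = vt[:k]          # shape (k, d)
    codes = X @ components.T     # encode: (n, k)
    return codes @ components    # decode: (n, d)


def train_linear_autoencoder(X, k, steps=3000, lr=0.05, seed=0):
    """Linear autoencoder trained by full-batch gradient descent on MSE."""
    init = np.random.default_rng(seed)
    d = X.shape[1]
    w_enc = init.normal(scale=0.1, size=(d, k))
    w_dec = init.normal(scale=0.1, size=(k, d))
    losses = []
    for _ in range(steps):
        codes = X @ w_enc                  # encoder
        recon = codes @ w_dec              # decoder
        err = recon - X
        losses.append(float(np.mean(err ** 2)))
        grad_recon = 2.0 * err / err.size  # d(loss)/d(recon)
        grad_dec = codes.T @ grad_recon
        grad_enc = X.T @ (grad_recon @ w_dec.T)
        w_enc -= lr * grad_enc
        w_dec -= lr * grad_dec
    return w_enc, w_dec, losses


pca_err = float(np.mean((pca_reconstruct(X, 2) - X) ** 2))
w_enc, w_dec, losses = train_linear_autoencoder(X, 2)

print("PCA reconstruction MSE:        ", pca_err)
print("Autoencoder MSE (first / last):", losses[0], losses[-1])
```

PCA gets its answer in closed form from one SVD, while the autoencoder has to be trained iteratively. What the autoencoder offers in return is the option of nonlinear layers when the data does not lie near a linear subspace.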

The idea is the same as with autoencoders or RBMs: translate many low-level features (e.g. user reviews or image pixels) into a compressed high-level representation (e.g. film genres or edges). The difference is that the weights are now learned only from neurons that are spatially close to each other.

Recently, I have been studying autoencoders. If I understood correctly, an autoencoder is a neural network where the input layer is identical to the output layer. So, the neural network tries to pr.

I am trying to design some generative NN models on datasets of RGB images and was debating whether I should be using dropout and/or batch norm. Here are my thoughts (I may be completely wrong):
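The remark above about weights being learned only from spatially close neurons can be illustrated with a plain sliding-window filter. This is a minimal sketch, not a trained network: the helper `conv2d_valid`, the toy image, and the hand-picked `edge_kernel` are assumptions made for the example. In a real convolutional layer the kernel values would be learned, and the operation is the cross-correlation that deep learning libraries usually call "convolution".

```python
import numpy as np


def conv2d_valid(image, kernel):
    """Slide a small kernel over the image; each output sees only a local patch."""
    kh, kw = kernel.shape
    out_h = image.shape[0] - kh + 1
    out_w = image.shape[1] - kw + 1
    out = np.empty((out_h, out_w))
    for i in range(out_h):
        for j in range(out_w):
            patch = image[i:i + kh, j:j + kw]  # only spatially close pixels
            out[i, j] = np.sum(patch * kernel)
    return out


# Toy 6x6 image with a vertical edge: left half dark (0), right half bright (1).
image = np.zeros((6, 6))
image[:, 3:] = 1.0

# Hand-picked horizontal-difference kernel (stands in for learned weights).
edge_kernel = np.array([[-1.0, 0.0, 1.0]] * 3)

response = conv2d_valid(image, edge_kernel)
print(response)
```

The response is non-zero only where the 3x3 window straddles the edge. That is the "pixels to edges" translation described above, computed from nine shared weights rather than one weight per pixel pair.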

As per this and this answer, autoencoders seem to be a technique that uses neural networks for dimension reduction. I would additionally like to know what a variational autoencoder is (its main differences and benefits over a "traditional" autoencoder) and what main learning tasks these algorithms are used for.

The first clear autoencoder presentation featuring a feedforward, multilayer neural network with a bottleneck layer was presented by Kramer in 1991 (full text at ). He discusses dimensionality reduction and feature extraction, and applications such as noise filtering, anomaly detection, and input estimation. Denoising autoencoders, referred to as "robust autoassociative neural networks", were.

The objective would look exactly the same as above: the KL divergence is still closed form (albeit the form now includes and ), and doesn't really show up elsewhere. A really easy way to think about this is to imagine you add a "scaling layer" at the very end of your encoder which adds to your mean and to your log variance. Then at the very start of the decoder, you add an "inverse scaling.

I am working on anomaly detection using an autoencoder neural network with $1$ hidden layer. This is an unsupervised setting, as I do not have previous examples of anomalies. The input data has pat.
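For the unsupervised anomaly-detection setting described above, a common approach is to train the autoencoder on normal data only and flag inputs whose reconstruction error is unusually high. The following is a rough sketch under assumptions, not the questioner's actual setup: the synthetic data, the 3-unit tanh hidden layer, the learning rate, and the 99th-percentile threshold are all illustrative choices.

```python
import numpy as np

rng = np.random.default_rng(0)

# Hypothetical "normal" data: 500 samples in 4-D driven by 2 factors plus noise.
factors = rng.normal(size=(500, 2))
X = factors @ rng.normal(size=(2, 4)) + 0.05 * rng.normal(size=(500, 4))
X = (X - X.mean(axis=0)) / X.std(axis=0)  # standardize each feature

# Autoencoder with one hidden layer (3 tanh units) and a linear output layer.
n_in, n_hidden = X.shape[1], 3
W1 = rng.normal(scale=0.1, size=(n_in, n_hidden))
b1 = np.zeros(n_hidden)
W2 = rng.normal(scale=0.1, size=(n_hidden, n_in))
b2 = np.zeros(n_in)


def forward(x):
    hidden = np.tanh(x @ W1 + b1)
    return hidden, hidden @ W2 + b2


# Full-batch gradient descent on mean squared reconstruction error.
lr = 0.05
for _ in range(4000):
    hidden, recon = forward(X)
    err = recon - X
    g_recon = 2.0 * err / err.size
    g_W2 = hidden.T @ g_recon
    g_b2 = g_recon.sum(axis=0)
    g_pre = (g_recon @ W2.T) * (1.0 - hidden ** 2)  # backprop through tanh
    g_W1 = X.T @ g_pre
    g_b1 = g_pre.sum(axis=0)
    W1 -= lr * g_W1
    b1 -= lr * g_b1
    W2 -= lr * g_W2
    b2 -= lr * g_b2


def reconstruction_error(x):
    """Per-sample mean squared reconstruction error."""
    _, recon = forward(x)
    return np.mean((recon - x) ** 2, axis=1)


# Threshold chosen from the training errors alone (no labeled anomalies needed).
train_errors = reconstruction_error(X)
threshold = np.quantile(train_errors, 0.99)

# A made-up point far outside the range of the training data.
anomaly = np.array([[10.0, -10.0, 10.0, -10.0]])
anomaly_error = reconstruction_error(anomaly)[0]
print("threshold:", threshold, "anomaly error:", anomaly_error)
```

The percentile threshold is a design choice that fixes the false-alarm rate on training data at roughly 1%. Without labeled anomalies the detection rate cannot be measured directly, so the threshold usually needs domain judgment.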

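The closed-form KL term and the "scaling layer" intuition mentioned above can be checked numerically. The symbols were lost from the text, so this sketch assumes diagonal Gaussians throughout, and the names `prior_mu` and `prior_logvar` are hypothetical stand-ins for the missing prior parameters. It computes the KL divergence against a standard normal prior and against a general diagonal Gaussian prior. It then verifies that shifting and scaling the encoder output reduces the general case to the standard one.

```python
import numpy as np


def kl_to_standard_normal(mu, logvar):
    """KL( N(mu, diag(exp(logvar))) || N(0, I) ), closed form."""
    return 0.5 * np.sum(mu ** 2 + np.exp(logvar) - logvar - 1.0)


def kl_to_diag_gaussian(mu, logvar, prior_mu, prior_logvar):
    """KL( N(mu, diag(exp(logvar))) || N(prior_mu, diag(exp(prior_logvar))) )."""
    return 0.5 * np.sum(
        prior_logvar - logvar
        + (np.exp(logvar) + (mu - prior_mu) ** 2) / np.exp(prior_logvar)
        - 1.0
    )


def reparameterize(mu, logvar, rng):
    """Sample z = mu + sigma * eps with eps ~ N(0, I)."""
    eps = rng.normal(size=mu.shape)
    return mu + np.exp(0.5 * logvar) * eps


# Example encoder outputs and a non-standard prior (all values are made up).
mu = np.array([0.5, -1.0, 2.0])
logvar = np.array([0.1, -0.3, 0.4])
prior_mu = np.array([1.0, 0.0, -1.0])
prior_logvar = np.array([0.2, 0.5, -0.1])

kl_general = kl_to_diag_gaussian(mu, logvar, prior_mu, prior_logvar)

# "Scaling layer" view: standardize the encoder output with the prior's
# parameters, then use the ordinary standard-normal KL term.
scaled_mu = (mu - prior_mu) / np.exp(0.5 * prior_logvar)
scaled_logvar = logvar - prior_logvar
kl_scaled = kl_to_standard_normal(scaled_mu, scaled_logvar)

z = reparameterize(mu, logvar, np.random.default_rng(0))
print(kl_general, kl_scaled, z.shape)
```

Under these assumptions the two KL values agree. This is one reading of why the objective "looks exactly the same": the change of prior can be absorbed into a fixed affine layer on each side of the latent variable.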
