Autoencoder For Anomaly Detection Fraud Detection Python

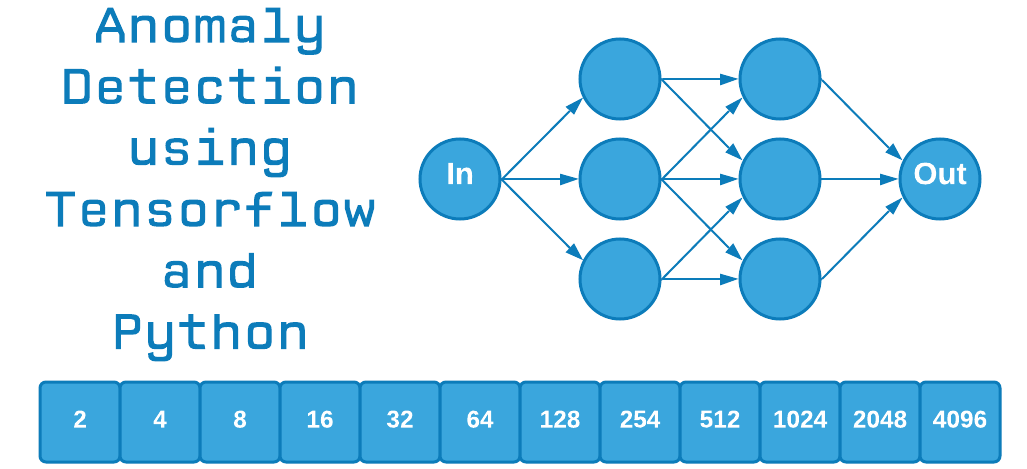

The U-Net architecture is essentially a first half that acts as an encoder and a second half that acts as a decoder. There are different variations of autoencoders, such as sparse and variational ones. They all compress and decompress the data.

If you want to create an autoencoder, you need to understand that you are going to reverse the process after encoding. That means that if you have three convolutional layers with filters in this order: 64, 32, 16, you should make the next group of convolutional layers do the inverse: 16, 32, 64. That's the reason why your algorithm is not learning.
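The mirroring rule above can be sketched without any deep learning library. The helper below is hypothetical (the name `mirrored_plan`, the pooling factor of 2 and the input length of 128 are assumptions for illustration); it only lists the filter counts and sequence lengths a symmetric convolutional autoencoder would pass through, so you can check that the decoder ends at the input size.

```python
def mirrored_plan(encoder_filters, input_len, pool=2):
    """Return (stage, filters, output_len) tuples for a symmetric conv autoencoder.

    Hypothetical helper: it plans the layers, it does not build a model.
    In Keras, an encoder stage would typically be a convolution followed by
    pooling, and a decoder stage a convolution followed by upsampling.
    """
    plan = []
    length = input_len
    # Encoder: each stage convolves, then downsamples by `pool`.
    for filters in encoder_filters:
        if length % pool != 0:
            raise ValueError(f"length {length} is not divisible by pool size {pool}")
        length //= pool
        plan.append(("encoder", filters, length))
    # Decoder: the same filter counts in reverse order, each stage upsamples by `pool`.
    for filters in reversed(encoder_filters):
        length *= pool
        plan.append(("decoder", filters, length))
    return plan


plan = mirrored_plan([64, 32, 16], input_len=128)
for stage, filters, length in plan:
    print(f"{stage:8s} filters={filters:3d} length={length}")
```

If the last decoder length does not match the input length, the reconstruction cannot be compared element by element with the input, which is one common reason a mismatched encoder and decoder fail to train.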

The input to the autoencoder then has shape (730, 128, 1), but when I plot the original signal against the decoded one, they are very different! I'd appreciate your help on this.

The autoencoder then works by storing inputs in terms of where they lie on the linear image of [...]. Observe that, absent the non-linear activation functions, an autoencoder essentially becomes equivalent to PCA, up to a change of basis. A useful exercise might be to consider why this is.

I'm relatively new to the field, but I'd like to know how variational autoencoders fare compared to transformers. I also want to know if there is a difference between an autoencoder and an encoder-decoder.
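The PCA remark above can be checked numerically. The sketch below does not train anything; it assumes toy Gaussian data and a hand-picked invertible matrix `A`, builds the PCA projection from an SVD, and shows that a linear encoder/decoder pair whose latent space is re-expressed in another basis produces different codes but exactly the same reconstruction.

```python
import numpy as np

rng = np.random.default_rng(0)

# Toy correlated data (an assumption for illustration), centred as PCA requires.
X = rng.normal(size=(200, 5)) @ rng.normal(size=(5, 5))
X = X - X.mean(axis=0)

k = 2  # bottleneck size
U, S, Vt = np.linalg.svd(X, full_matrices=False)
V_k = Vt[:k].T  # top-k principal directions, shape (5, k)

# PCA viewed as a linear autoencoder: encode with V_k, decode with V_k^T.
Z_pca = X @ V_k
X_pca = Z_pca @ V_k.T

# A different linear autoencoder: same subspace, latent axes changed by A.
A = np.array([[2.0, 1.0],
              [0.5, 3.0]])  # any invertible k x k matrix would do
W_enc = V_k @ A
W_dec = np.linalg.inv(A) @ V_k.T
Z_ae = X @ W_enc
X_ae = Z_ae @ W_dec

same_reconstruction = np.allclose(X_ae, X_pca)
same_codes = np.allclose(Z_ae, Z_pca)

# The rank-k reconstruction error equals the energy in the discarded singular values.
err = np.sum((X - X_pca) ** 2)
discarded = np.sum(S[k:] ** 2)

print("same reconstruction:", same_reconstruction)
print("same latent codes:  ", same_codes)
print("error matches discarded singular values:", np.isclose(err, discarded))
```

The latent codes differ because `A` mixes the latent axes, but the decoder undoes that mixing, which is what "up to a change of basis" means here.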

Pre-training a network with an RBM or autoencoder on lots of unlabeled data allows faster fine-tuning than any weight initialization. That is, if I want to train a network only once, the fast way is "fast weight initialization"; but if I want to train a network many times, the faster way is to pre-train a model once with an RBM or autoencoder and, for each training run, to use this pre...

Hey, so the Keras implementation of cosine similarity is called cosine proximity. It has just one small change: cosine proximity = -1 * (cosine similarity) of the two vectors. This is done to keep in line with loss functions being minimized in gradient descent. To elaborate, the higher the angle between x_pred and x_true, the lower the cosine value. This value approaches 0 as x_pred and ...

Before asking 'how can an autoencoder be used to cluster data?' we must first ask 'can autoencoders cluster data?' Since an autoencoder learns to recreate the data points from the latent space, if we assume that the autoencoder maps the latent space in a "continuous manner", the data points that are from the same cluster must be mapped together.

Note that for input values in the range [0, 1] you can use binary cross-entropy, as is commonly done (e.g. the Keras autoencoder tutorial and this paper). However, don't expect the loss value to become zero, since binary cross-entropy does not return zero when the prediction and label are neither zero nor one (no matter whether they are equal or not).
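Two of the loss-related points above can be checked with plain NumPy. The functions below are illustrative reimplementations, not the Keras API: `binary_crossentropy` assumes a small clipping constant `eps` to avoid `log(0)`, and `cosine_proximity` is written here as the negative of the cosine similarity.

```python
import numpy as np


def binary_crossentropy(y_true, y_pred, eps=1e-7):
    """Mean binary cross-entropy; `eps` clipping is an assumption to avoid log(0)."""
    y_pred = np.clip(y_pred, eps, 1 - eps)
    per_element = -(y_true * np.log(y_pred) + (1 - y_true) * np.log(1 - y_pred))
    return float(np.mean(per_element))


def cosine_proximity(y_true, y_pred):
    """Negative cosine similarity, so that minimizing it aligns the two vectors."""
    y_true = y_true / np.linalg.norm(y_true)
    y_pred = y_pred / np.linalg.norm(y_pred)
    return float(-np.sum(y_true * y_pred))


# A perfect reconstruction of non-binary targets still has a positive loss.
soft = np.array([0.2, 0.5, 0.8])
loss_soft = binary_crossentropy(soft, soft)

# A perfect reconstruction of binary targets has a loss of (almost) zero.
hard = np.array([0.0, 1.0, 1.0])
loss_hard = binary_crossentropy(hard, hard)

print("BCE, perfect reconstruction of soft targets:", loss_soft)
print("BCE, perfect reconstruction of hard targets:", loss_hard)

# Cosine proximity for aligned, orthogonal and opposite vectors.
a = np.array([1.0, 0.0])
prox_aligned = cosine_proximity(a, np.array([2.0, 0.0]))
prox_orthogonal = cosine_proximity(a, np.array([0.0, 3.0]))
prox_opposite = cosine_proximity(a, np.array([-1.0, 0.0]))

print("aligned:", prox_aligned, "orthogonal:", prox_orthogonal, "opposite:", prox_opposite)
```

For an autoencoder this means the binary cross-entropy has a floor set by the entropy of the targets themselves, so it is the decrease in loss, not its absolute value, that indicates how well the reconstruction is going.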
