Approximated Prompt Tuning for Vision-Language Pre-Trained Models | DeepAI

Based on this finding, we propose a novel approximated prompt tuning (APT) approach for efficient vision-language (VL) transfer learning. To validate APT, we apply it to two representative VLP models, ViLT and METER, and conduct extensive experiments on a range of downstream tasks. Inspired by the recent popularity of prompt tuning, we first prove that the processed visual features can also be projected onto the semantic space of pre-trained language models (PLMs) and act as prompt tokens, bridging the gap between single-modal and multi-modal learning.
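As a rough illustration of the last sentence above, the toy sketch below projects visual features into a language model's embedding space and prepends them as prompt tokens. Everything in it is hypothetical: the sizes (`D_MODEL`, `D_VISUAL`), the random `token_embedding` table standing in for a frozen PLM, and the single linear projection (`proj_W`, `proj_b`) are assumptions chosen for illustration, not the architecture of APT, ViLT, or METER.

```python
import numpy as np

rng = np.random.default_rng(0)

D_MODEL = 16   # hypothetical hidden size of the frozen language model
D_VISUAL = 8   # hypothetical size of the processed visual features

# Frozen stand-in for a pre-trained word-embedding table (vocab of 100 tokens).
token_embedding = rng.normal(size=(100, D_MODEL))

def embed_text(token_ids):
    """Look up frozen word embeddings for a sequence of token ids."""
    return token_embedding[np.asarray(token_ids)]

# Trainable part (hypothetical): a linear map from visual features into the
# PLM's embedding space. Its outputs are used as prompt tokens.
proj_W = rng.normal(scale=0.1, size=(D_VISUAL, D_MODEL))
proj_b = np.zeros(D_MODEL)

def visual_prompts(visual_feats):
    """Map (num_regions, D_VISUAL) features to (num_regions, D_MODEL) prompt tokens."""
    return visual_feats @ proj_W + proj_b

def build_input(visual_feats, token_ids):
    """Prepend the projected visual prompt tokens to the frozen text embeddings."""
    return np.concatenate([visual_prompts(visual_feats), embed_text(token_ids)], axis=0)

x = build_input(rng.normal(size=(4, D_VISUAL)), [5, 17, 42])
print(x.shape)  # (7, 16): 4 visual prompt tokens followed by 3 text tokens
```

Only the projection parameters (144 values in this toy setup) would be trained; the text embeddings stay frozen, which is what makes this style of prompting parameter-efficient.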

Class-Aware Visual Prompt Tuning for Vision-Language Pre-Trained Model

To address this issue, efficient adaptation methods such as prompt tuning have been proposed. We explore prompt tuning with multi-task pre-trained initialization and find that it can significantly improve model performance. The proposed method demonstrates a new dual-modality prompt tuning paradigm for large pre-trained vision-language models, which simultaneously learns visual and text prompts from the ends of both the text and image encoders. To this end, we propose parameter-efficient prompt tuning (Pro-tuning) to adapt frozen vision models to various downstream vision tasks. The key to Pro-tuning is prompt-based tuning, i.e., learning task-specific vision prompts for downstream input images while keeping the pre-trained model frozen.
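The dual-modality idea above (and the frozen-backbone, learned-vision-prompt setup of Pro-tuning) can be sketched with toy encoders. This is a minimal sketch under loud assumptions: `encode`, `W_text`, `W_image`, `N_CTX`, and the temperature are hypothetical, the "encoders" are a fixed random linear map plus mean pooling rather than real pre-trained Transformers, and both prompts are simply prepended at the input, which is an assumption about placement rather than a description of any specific paper's design.

```python
import numpy as np

rng = np.random.default_rng(0)
D = 32      # hypothetical shared embedding width
N_CTX = 4   # hypothetical number of learnable prompt tokens per modality

# Frozen toy "encoders": fixed linear map, tanh, mean pooling, L2 normalization.
W_text = rng.normal(size=(D, D)) / np.sqrt(D)
W_image = rng.normal(size=(D, D)) / np.sqrt(D)

def encode(tokens, W):
    feats = np.tanh(tokens @ W).mean(axis=0)
    return feats / np.linalg.norm(feats)

# Trainable prompts: the only parameters that tuning would update.
text_prompt = rng.normal(scale=0.02, size=(N_CTX, D))
visual_prompt = rng.normal(scale=0.02, size=(N_CTX, D))

def class_logits(patch_embeds, class_name_embeds, temperature=0.01):
    """Score one image against every class name.

    patch_embeds: (num_patches, D) image patch embeddings.
    class_name_embeds: list of (len_i, D) arrays, one per class name.
    """
    img = encode(np.concatenate([visual_prompt, patch_embeds]), W_image)
    txt = np.stack([encode(np.concatenate([text_prompt, name]), W_text)
                    for name in class_name_embeds])
    return (txt @ img) / temperature  # cosine similarity scaled by 1/temperature

patches = rng.normal(size=(9, D))
classes = [rng.normal(size=(2, D)), rng.normal(size=(3, D)), rng.normal(size=(1, D))]
logits = class_logits(patches, classes)
print(logits.shape)  # (3,)

trainable = text_prompt.size + visual_prompt.size
frozen = W_text.size + W_image.size
print(trainable, frozen)  # 256 2048
```

In a real setup the gradient of a classification loss on `logits` would update only `text_prompt` and `visual_prompt`, while both encoders stay fixed.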

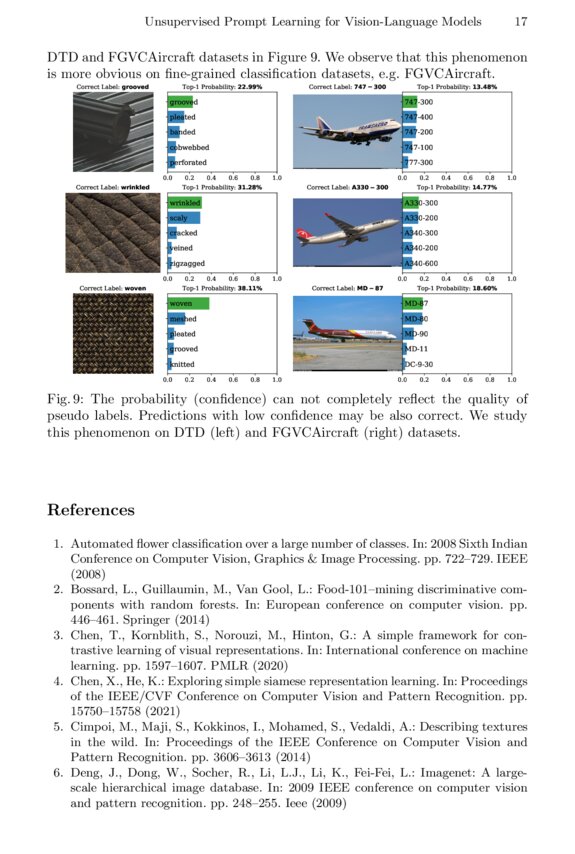

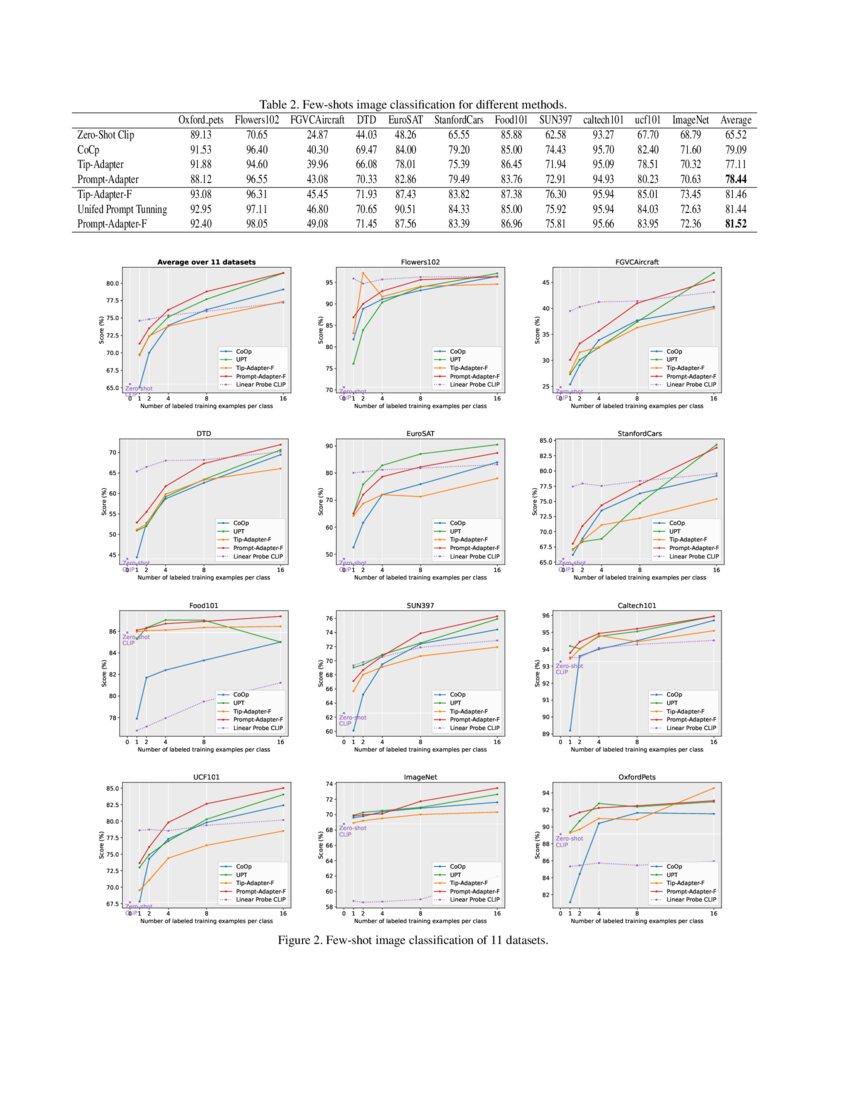

Unsupervised Prompt Learning for Vision-Language Models | DeepAI

… already high computational overhead. In this paper, we revisit the principle of prompt tuning for Transformer-based VLP models and reveal that the impact of soft prompt tokens can be …
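The truncated sentence above concerns the effect of soft prompt tokens inside attention. One standard identity (basic softmax algebra, not taken from the paper and not APT's approximation itself) helps explain why that effect can be studied separately: when input tokens attend over the concatenation [X; P], the output equals a gated mix of attention over X alone and attention over P alone. The function names below are hypothetical, and the sketch tracks only the input tokens' outputs.

```python
import numpy as np

def softmax_attention(q, k, v):
    """Scaled dot-product attention; also returns each query row's log-sum-exp."""
    scores = q @ k.T / np.sqrt(q.shape[-1])
    m = scores.max(axis=-1, keepdims=True)
    e = np.exp(scores - m)
    z = e.sum(axis=-1, keepdims=True)
    return (e / z) @ v, np.log(z) + m

def attention_with_prompts_joint(x, p, Wq, Wk, Wv):
    """Baseline: input tokens attend over the concatenation [x; p]."""
    kv = np.concatenate([x, p])
    out, _ = softmax_attention(x @ Wq, kv @ Wk, kv @ Wv)
    return out

def attention_with_prompts_split(x, p, Wq, Wk, Wv):
    """Same result from two separate attentions mixed by a per-row gate."""
    q = x @ Wq
    out_x, lse_x = softmax_attention(q, x @ Wk, x @ Wv)  # inputs only
    out_p, lse_p = softmax_attention(q, p @ Wk, p @ Wv)  # prompts only
    # Fraction of attention mass on the input tokens: Z_x / (Z_x + Z_p).
    lam = 1.0 / (1.0 + np.exp(lse_p - lse_x))
    return lam * out_x + (1.0 - lam) * out_p

rng = np.random.default_rng(0)
x = rng.normal(size=(5, 8))  # 5 input tokens
p = rng.normal(size=(3, 8))  # 3 soft prompt tokens
Wq, Wk, Wv = (rng.normal(size=(8, 8)) / np.sqrt(8) for _ in range(3))
joint = attention_with_prompts_joint(x, p, Wq, Wk, Wv)
split = attention_with_prompts_split(x, p, Wq, Wk, Wv)
print(np.allclose(joint, split))  # True
```

The first term, `out_x`, is exactly what the frozen model computes without prompts, so everything the prompts contribute is isolated in `out_p` and the gate `lam`.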

Prompt Tuning Based Adapter for Vision-Language Model Adaption | DeepAI
