Apache Spark Ecosystem And Spark Components Data Science Learning

Apache Spark Ecosystem: Complete Spark Components Guide

1. Objective

In this tutorial on the Apache Spark ecosystem, we will learn what Apache Spark is and what makes up its ecosystem. It covers the components of the Spark ecosystem: Spark Core, Spark SQL, Spark Streaming, Spark MLlib, Spark GraphX, and SparkR. Spark processes very large datasets and is one of the most active Apache projects today. It is written in Scala and provides APIs in Python, Scala, Java, and R.

Apache Spark is a multi-language engine for executing data engineering, data science, and machine learning workloads on single-node machines or clusters. For aspiring data engineers, learning Spark is a crucial step in mastering the data engineering landscape.

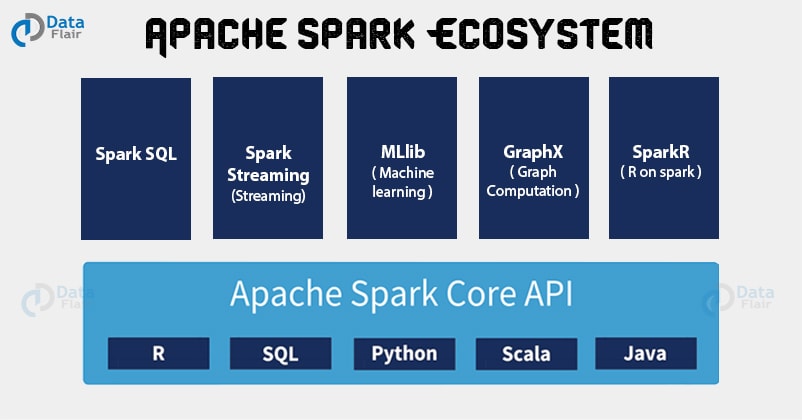

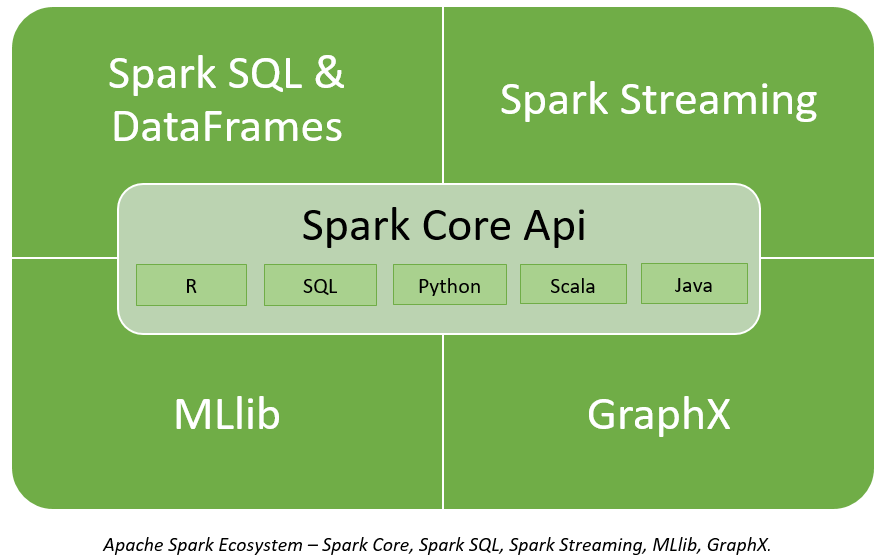

This blog gives a brief introduction to the different components of the Spark ecosystem. Apache Spark consists of the Spark Core engine, Spark SQL, Spark Streaming, MLlib, GraphX, and SparkR. You can use the Spark Core engine together with any of the other five components; it is not necessary to use all of them together.

What makes Apache Spark fast and reliable? To answer this, let's introduce the Apache Spark ecosystem and explain its components. Many of these components were built to resolve issues that cropped up while using Hadoop MapReduce.

Let's understand each Spark component in detail.

Spark Core is the heart of Spark and provides its core functionality. It holds the components for task scheduling, fault recovery, interaction with storage systems, and memory management.

Spark SQL is built on top of Spark Core. It provides support for structured data.
