AdaBoost and XGBoost Models: Boosting Models Run Multiple Decision Trees

In this article, we'll explore how AdaBoost and XGBoost work and highlight their key differences. By the end, you'll have a clear understanding of when and how to use each of these algorithms. XGBoost and AdaBoost are two popular boosting algorithms used in machine learning for classification and regression tasks. Both algorithms involve training a sequence of weak learners.

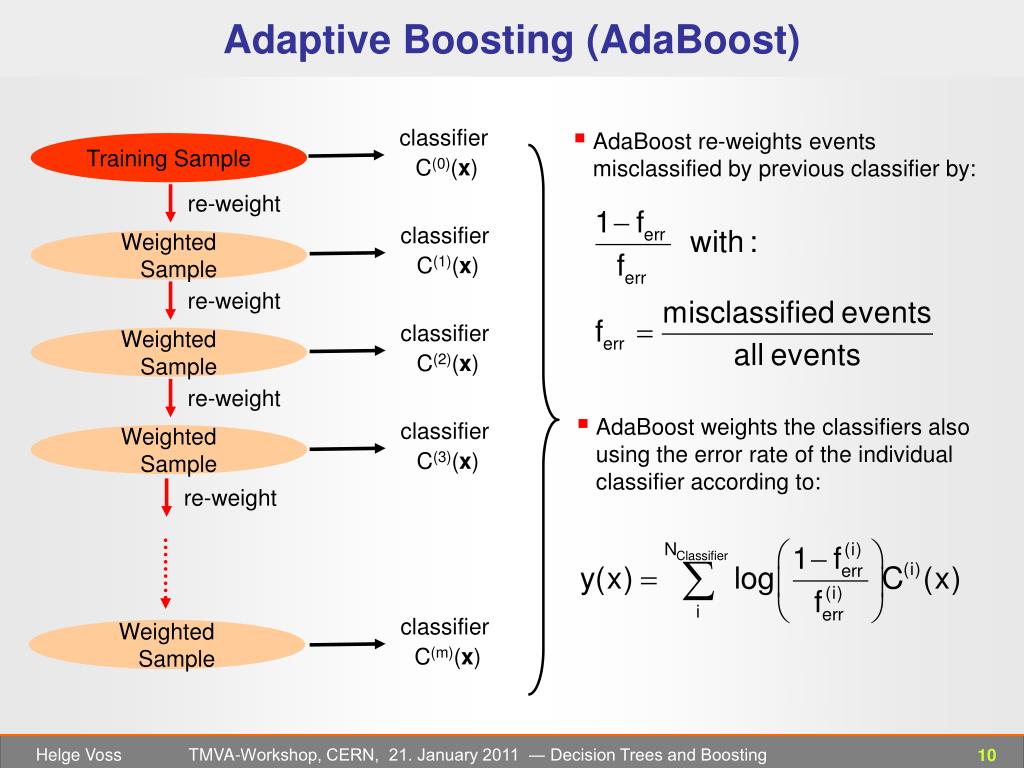

Two boosting procedures are examined: AdaBoost (Adaptive Boosting) and XGBoost (Extreme Gradient Boosting). AdaBoost is a family of boosting algorithms. This kind of learner pays more attention to the wrongly classified samples during training, modifying the sample distribution and repeating this step until the number of tra…

AdaBoost (Adaptive Boosting) is a powerful tool known for creating strong predictive models. In this text, we'll dive deep into these two titans of boosting: their similarities, differences, and pros and cons.

One example evaluates multiple classification algorithms, including decision trees, random forests, and AdaBoost, on the breast cancer dataset. The models are compared based on accuracy.

This comprehensive guide explores the fundamental differences, strengths, and limitations of AdaBoost vs. XGBoost vs. gradient boosting, helping you make informed decisions for your next machine learning project.
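The reweighting idea behind AdaBoost mentioned above (pay more attention to wrongly classified samples, adjust the sample distribution, repeat) can be sketched in a few lines of plain Python. This is a minimal illustration, not a library implementation: the function names (`train_stump`, `adaboost_fit`, `adaboost_predict`), the one-dimensional decision stumps used as weak learners, and the toy data are all assumptions made up for this example.

```python
import math

# Toy 1-D data (hypothetical, for illustration only). Labels are +1 / -1.
TOY_X = [0, 1, 2, 3, 4, 5, 6, 7, 8, 9]
TOY_Y = [1, 1, 1, -1, -1, -1, 1, 1, 1, -1]


def stump_predict(x, threshold, polarity):
    """A decision stump: predict `polarity` if x > threshold, else `-polarity`."""
    return polarity if x > threshold else -polarity


def train_stump(xs, ys, weights):
    """Return (weighted_error, threshold, polarity) of the best stump."""
    sorted_xs = sorted(set(xs))
    # Candidate thresholds: one below the minimum, plus midpoints between neighbours.
    thresholds = [sorted_xs[0] - 1.0] + [
        (a + b) / 2.0 for a, b in zip(sorted_xs, sorted_xs[1:])
    ]
    best = None
    for threshold in thresholds:
        for polarity in (1, -1):
            # Weighted error = total weight of the samples this stump gets wrong.
            error = sum(
                w
                for x, y, w in zip(xs, ys, weights)
                if stump_predict(x, threshold, polarity) != y
            )
            if best is None or error < best[0]:
                best = (error, threshold, polarity)
    return best


def adaboost_fit(xs, ys, n_rounds=3):
    """Train `n_rounds` stumps, reweighting the samples after each round."""
    n = len(xs)
    weights = [1.0 / n] * n  # start from a uniform sample distribution
    ensemble = []
    for _ in range(n_rounds):
        error, threshold, polarity = train_stump(xs, ys, weights)
        error = min(max(error, 1e-10), 1 - 1e-10)  # avoid log(0) and division by zero
        alpha = 0.5 * math.log((1 - error) / error)  # vote strength of this stump
        ensemble.append((alpha, threshold, polarity))
        # Misclassified samples (y * prediction == -1) gain weight; correct ones lose it.
        weights = [
            w * math.exp(-alpha * y * stump_predict(x, threshold, polarity))
            for x, y, w in zip(xs, ys, weights)
        ]
        total = sum(weights)
        weights = [w / total for w in weights]  # renormalise to a distribution
    return ensemble


def adaboost_predict(ensemble, x):
    """Weighted vote of all stumps; the sign of the score is the predicted label."""
    score = sum(alpha * stump_predict(x, t, p) for alpha, t, p in ensemble)
    return 1 if score >= 0 else -1


if __name__ == "__main__":
    model = adaboost_fit(TOY_X, TOY_Y, n_rounds=3)
    predictions = [adaboost_predict(model, x) for x in TOY_X]
    accuracy = sum(p == y for p, y in zip(predictions, TOY_Y)) / len(TOY_Y)
    print("training accuracy:", accuracy)
```

No single stump can separate this toy data, but the weighted vote of a few stumps can, which is the sense in which boosting turns weak learners into a stronger one. In practice you would more likely reach for a library implementation than hand-rolled stumps.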

Stronger models can be built by combining weaker ones, and the two main methods for doing so are bagging and boosting. In a nutshell, bagging consists of constructing a few models in a random way and joining them together.

Boosting and weak learners: XGBoost is an ensemble method that combines the predictions of multiple weak learners (usually decision trees) to create a strong learner.

This article will explore how boosting classification models can effectively predict customer churn and guide you in choosing the best model for your specific needs. Explore the AdaBoost boosting algorithm, compare it with gradient boosting and XGBoost, and understand when and why to use boosting methods in your machine learning projects.
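To make the idea of combining weak learners concrete on the gradient boosting side, here is a rough sketch of plain gradient boosting for regression with squared-error loss: each new stump is fitted to the residuals of the current ensemble and added with a small learning rate. This is not XGBoost's implementation (XGBoost adds regularization, second-order gradient information, and many engineering optimizations). The function names, the one-dimensional stumps, and the toy data are assumptions invented for this example.

```python
# Toy 1-D regression data (hypothetical, for illustration only).
TOY_X = [1, 2, 3, 4, 5, 6, 7, 8]
TOY_Y = [1.0, 1.2, 1.9, 3.1, 4.8, 5.1, 5.0, 7.2]


def fit_regression_stump(xs, targets):
    """Fit a stump (threshold, left_mean, right_mean) minimising squared error."""
    sorted_xs = sorted(set(xs))
    best = None
    for a, b in zip(sorted_xs, sorted_xs[1:]):
        threshold = (a + b) / 2.0
        left = [t for x, t in zip(xs, targets) if x <= threshold]
        right = [t for x, t in zip(xs, targets) if x > threshold]
        left_mean = sum(left) / len(left)
        right_mean = sum(right) / len(right)
        sse = sum((t - left_mean) ** 2 for t in left) + sum(
            (t - right_mean) ** 2 for t in right
        )
        if best is None or sse < best[0]:
            best = (sse, threshold, left_mean, right_mean)
    return best[1], best[2], best[3]


def stump_value(stump, x):
    """Evaluate a fitted stump at x."""
    threshold, left_mean, right_mean = stump
    return left_mean if x <= threshold else right_mean


def gradient_boost_fit(xs, ys, n_rounds=20, learning_rate=0.3):
    """Return a model (base, learning_rate, stumps) trained on residuals."""
    base = sum(ys) / len(ys)  # initial prediction: the mean target
    predictions = [base] * len(xs)
    stumps = []
    for _ in range(n_rounds):
        # For squared-error loss, the negative gradient is simply the residual.
        residuals = [y - p for y, p in zip(ys, predictions)]
        stump = fit_regression_stump(xs, residuals)
        stumps.append(stump)
        # Take a small step towards the residuals instead of fitting them fully.
        predictions = [
            p + learning_rate * stump_value(stump, x)
            for x, p in zip(xs, predictions)
        ]
    return base, learning_rate, stumps


def gradient_boost_predict(model, x):
    """Sum the base value and the scaled contribution of every stump."""
    base, learning_rate, stumps = model
    return base + learning_rate * sum(stump_value(s, x) for s in stumps)


def mean_squared_error(model, xs, ys):
    """Average squared difference between predictions and targets."""
    return sum((gradient_boost_predict(model, x) - y) ** 2 for x, y in zip(xs, ys)) / len(xs)


if __name__ == "__main__":
    for rounds in (0, 1, 5, 20):
        model = gradient_boost_fit(TOY_X, TOY_Y, n_rounds=rounds)
        print(rounds, "rounds -> training MSE", mean_squared_error(model, TOY_X, TOY_Y))
```

The contrast with AdaBoost is in how each round is steered: AdaBoost changes the sample weights, while gradient boosting changes the targets (the residuals) that the next weak learner is asked to fit.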

