A Comprehensive Guide to the GPT (Generative Pre-trained Transformer) Model

In this article, we examine the process of training a GPT model from scratch, including preprocessing the data, tuning the hyperparameters, and fine-tuning. The review gives a detailed overview of the generative pre-trained transformer, including its architecture, working process, training procedures, enabling technologies, and impact on various applications.

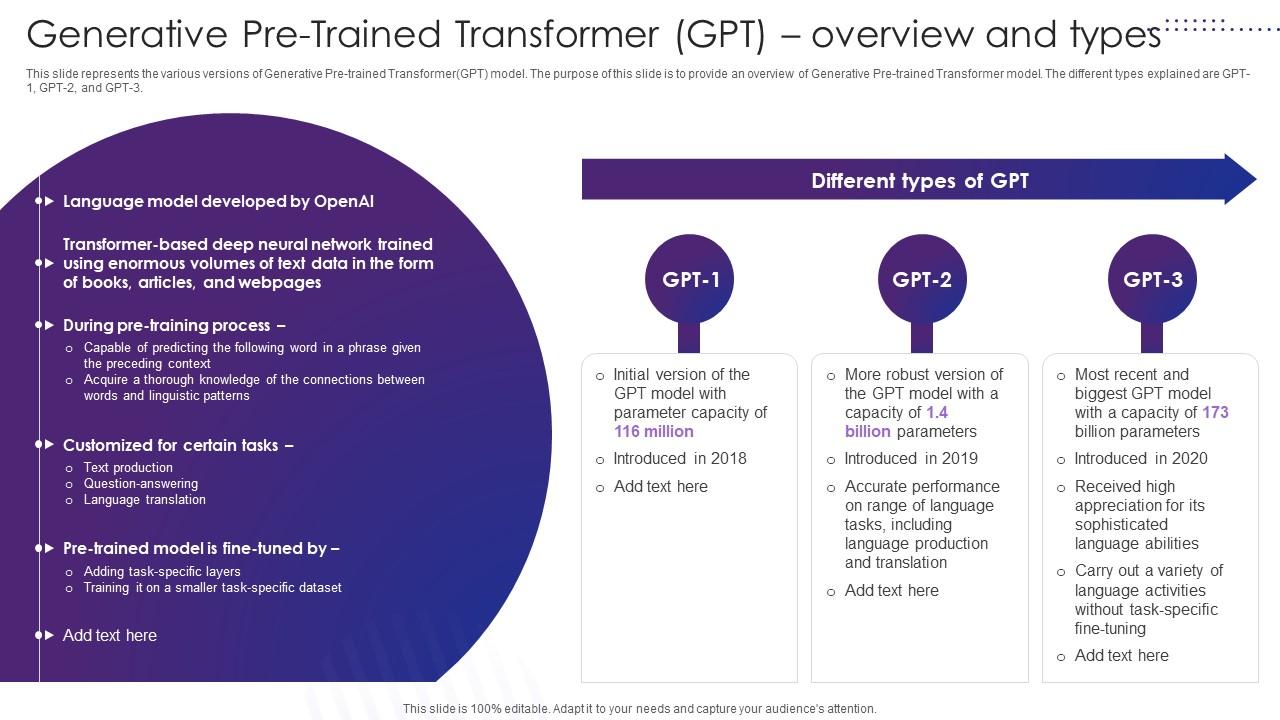

GPT, or generative pre-trained transformer, is a family of language models created by OpenAI. It is designed to understand and generate human-like text based on training on large amounts of data. This article explains GPTs, detailing their capabilities in writing, answering, summarizing, and conversing, and explores the underlying mechanisms and effectiveness of GPT models, including architectural components, training methods, and real-world applications.

PyTorch is a deep learning framework that provides flexibility and speed, while the Transformers library by Hugging Face offers pre-trained models and tokenizers, including GPT-2. To train a machine learning model like GPT effectively, it is crucial to preprocess and prepare the text data properly.
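
As a rough illustration of what preparing text data for GPT-style training can involve, the sketch below turns raw text into integer ids and then into input/target pairs, where each target sequence is the input shifted by one position. It is a minimal sketch under stated assumptions: the whitespace tokenizer and the helper names `build_vocab`, `encode`, and `make_examples` are hypothetical and chosen for illustration. GPT-2 itself uses a byte-level BPE tokenizer, which the Transformers library provides.

```python
def build_vocab(text):
    """Assign an integer id to each distinct whitespace-separated token.

    Assumption: a toy whitespace tokenizer stands in for a real subword tokenizer.
    """
    vocab = {}
    for tok in text.lower().split():
        if tok not in vocab:
            vocab[tok] = len(vocab)
    return vocab


def encode(text, vocab):
    """Convert text to a list of token ids using the toy vocabulary."""
    return [vocab[tok] for tok in text.lower().split()]


def make_examples(ids, block_size):
    """Slide a window over the ids; each target is its input shifted by one."""
    examples = []
    for start in range(len(ids) - block_size):
        x = ids[start:start + block_size]
        y = ids[start + 1:start + block_size + 1]
        examples.append((x, y))
    return examples


corpus = "the model reads text and the model predicts the next token"
vocab = build_vocab(corpus)
ids = encode(corpus, vocab)
examples = make_examples(ids, block_size=4)

for x, y in examples[:2]:
    print(x, "->", y)
```

Each pair asks the model to predict token `i + 1` from the tokens up to position `i`, which is why the targets are just the inputs moved one step to the left. In a PyTorch setup these pairs would typically be wrapped in a dataset and batched.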

GPT models generate text by predicting the next token in a sequence, and have been applied to tasks such as text generation, translation, summarization, and question answering. At the time this text was written, the latest version was GPT-4.

A broader survey is available in "GPT (Generative Pre-trained Transformer): A Comprehensive Review on Enabling Technologies, Potential Applications, Emerging Challenges, and Future Directions", published in IEEE Access (volume 12).

Training: these models are pre-trained on a large corpus of text data, enabling them to understand and generate text in a conversational manner. GPT-1, introduced in 2018, was the first model in the series and applied generative pre-training to the Transformer architecture.

GPT-style models have also been adapted beyond text. One example is BatteryGPT, proposed as a frozen pre-trained GPT framework tailored for lithium-ion battery energy forecasting. Instead of retraining the entire model, the approach fine-tunes only the input embedding and output projection layers, enabling efficient adaptation to varied battery ...
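
To make the idea of generating text by repeatedly predicting the next token more concrete, here is a small sketch of an autoregressive generation loop. It is not how GPT computes its predictions: a bigram count table stands in for the Transformer, and the names `train_bigram` and `generate` are hypothetical. Only the loop structure (predict, append, repeat) carries over to GPT-style decoding.

```python
from collections import Counter, defaultdict


def train_bigram(tokens):
    """Count how often each token follows each other token.

    Assumption: this count table is a toy stand-in for a trained language model.
    """
    counts = defaultdict(Counter)
    for prev, nxt in zip(tokens, tokens[1:]):
        counts[prev][nxt] += 1
    return counts


def generate(counts, prompt, max_new_tokens):
    """Greedy autoregressive decoding from a non-empty prompt."""
    out = list(prompt)
    for _ in range(max_new_tokens):
        followers = counts.get(out[-1])
        if not followers:
            break  # no known continuation for the last token
        # Pick the most frequent follower; break ties alphabetically
        # so the output is deterministic.
        next_tok = min(followers, key=lambda t: (-followers[t], t))
        out.append(next_tok)  # the new token becomes part of the context
    return out


tokens = "the cat sat on the mat and the cat slept".split()
model = train_bigram(tokens)
generated = generate(model, ["the"], max_new_tokens=3)
print(" ".join(generated))  # prints "the cat sat on"
```

A GPT model conditions on the whole preceding context rather than only the last token, and in practice decoding often samples from the predicted distribution instead of always taking the most likely token.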

