A Comparative Analysis Of Different LLM Evaluation Metrics By

Such a method requires the study's authors to conduct a comparative analysis of various benchmark LLMs in order to assess the viability of different evaluation metrics. Many metrics and techniques exist for evaluating LLM responses; we discuss the most frequently used ones.

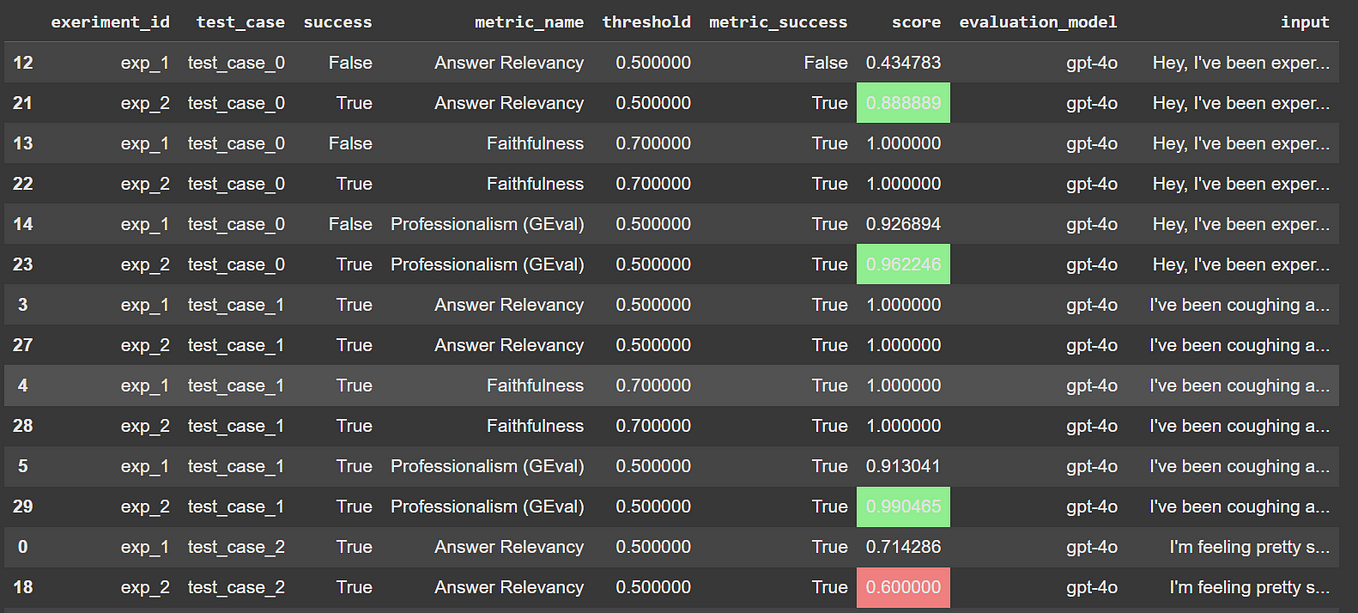

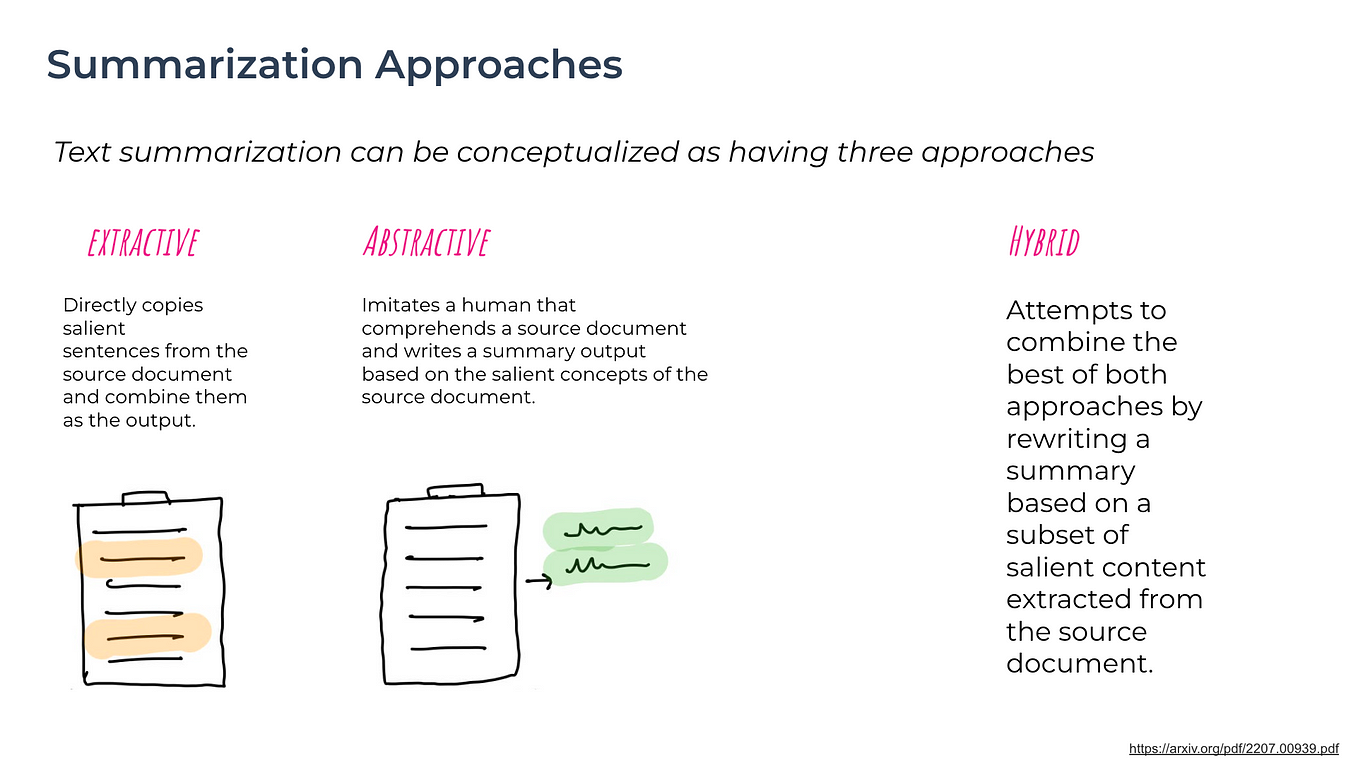

We created a summary of the best datasets and metrics for your specific aims, starting with benchmark selection. A combination of benchmarks is often necessary to comprehensively evaluate a language model's performance, so a set of benchmark tasks is selected to cover a wide range of language-related challenges.

In collaboration with Deloitte, this study compares traditional natural language processing (NLP) metrics with the emerging LLM-as-a-judge paradigm across tasks including retrieval, response accuracy, toxicity, bias, hallucination, summarization, tone, and readability.

Key LLM evaluation metrics measure performance, fairness, bias, and accuracy in large language models. This article explores the metrics currently in wide use for LLM evaluation, the key challenges that need to be overcome, and how golden evaluation datasets can be used to fine-tune the metrics for industry-specific domains.

LLM evaluation metrics range from LLM judges for custom criteria to ranking metrics and semantic similarity. This guide covers key methods for LLM evaluation and benchmarking. Automated metrics form the first layer: BLEU, ROUGE, F1 score, BERTScore, exact match, and GPTScore scan for clear-cut errors and successes. The next layer consists of human reviewers, who bring in Likert scales, expert commentary, and head-to-head rankings.

Metrics that quantify the performance of LLMs play a pivotal role. This paper offers a comprehensive exploration of LLM evaluation from a metrics perspective, providing insights into the selection and interpretation of metrics currently in use. Our main goal is to eluc...

LLM evaluation metrics such as answer correctness, semantic similarity, and hallucination score an LLM system's output based on criteria you care about. They are critical to LLM evaluation because they help quantify the performance of different LLM systems, which can be just the LLM itself.
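To make the first, automated layer more concrete, here is a minimal sketch of two of the metrics named above: exact match and a token-level F1 score. The normalization rules (lowercasing, stripping punctuation and English articles) and the function names `normalize`, `exact_match`, and `token_f1` are assumptions chosen for illustration, loosely following common question-answering evaluation practice; they are not taken from any of the studies mentioned here.

```python
import re
import string
from collections import Counter


def normalize(text: str) -> list[str]:
    """Lowercase, drop punctuation and English articles, split on whitespace.

    These normalization rules are an illustrative assumption, not a standard.
    """
    text = text.lower()
    text = "".join(ch for ch in text if ch not in string.punctuation)
    text = re.sub(r"\b(a|an|the)\b", " ", text)
    return text.split()


def exact_match(prediction: str, reference: str) -> float:
    """Return 1.0 if the normalized token sequences are identical, else 0.0."""
    return float(normalize(prediction) == normalize(reference))


def token_f1(prediction: str, reference: str) -> float:
    """Harmonic mean of token precision and recall (bag-of-words overlap)."""
    pred = normalize(prediction)
    ref = normalize(reference)
    if not pred or not ref:
        # Two empty answers count as a match; one empty answer does not.
        return float(pred == ref)
    # Counter intersection keeps the minimum count of each shared token.
    overlap = sum((Counter(pred) & Counter(ref)).values())
    if overlap == 0:
        return 0.0
    precision = overlap / len(pred)
    recall = overlap / len(ref)
    return 2 * precision * recall / (precision + recall)


if __name__ == "__main__":
    print(exact_match("The Eiffel Tower.", "eiffel tower"))
    print(token_f1("Paris is the capital", "Paris"))
```

Exact match rewards only answers that are identical after normalization, while token F1 gives partial credit for overlapping words. Neither captures paraphrases, which is one reason semantic-similarity metrics and LLM judges are used alongside them.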

