2021-03-10 EfficientNet: Rethinking Model Scaling for Convolutional Neural Networks (Paper)

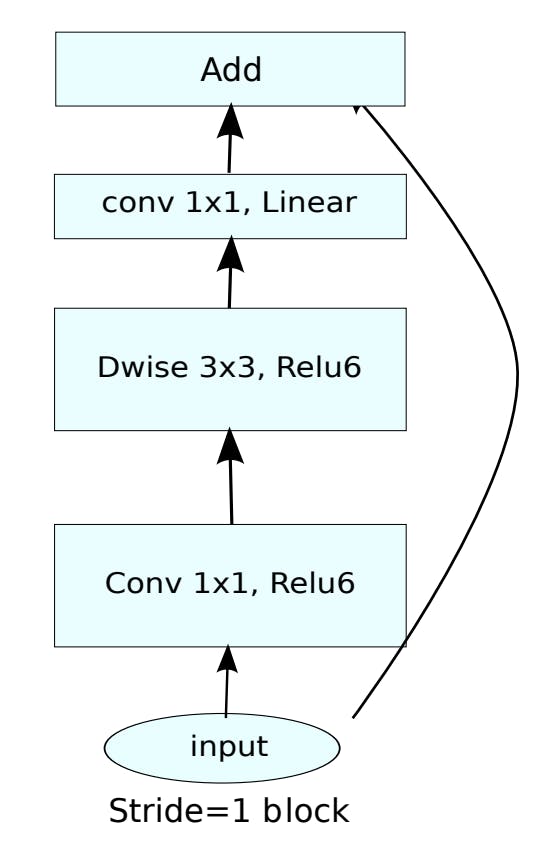

We systematically study model scaling and find that carefully balancing network depth, width, and resolution can lead to better performance. Based on this observation, we propose a new scaling method that uniformly scales all three dimensions (depth, width, and resolution) using a simple yet highly effective compound coefficient. We demonstrate the effectiveness of this method on scaling up MobileNets and ResNet.
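The compound coefficient can be written out explicitly. The formulation below summarizes the original EfficientNet paper as we understand it: a single user-chosen coefficient $\phi$ scales depth $d$, width $w$, and resolution $r$ through constants $\alpha$, $\beta$, $\gamma$ that are found by a small grid search on the baseline network. Treat the notation as a summary, not a verbatim reproduction.

$$
d = \alpha^{\phi}, \qquad w = \beta^{\phi}, \qquad r = \gamma^{\phi}
$$

$$
\text{subject to}\quad \alpha \cdot \beta^{2} \cdot \gamma^{2} \approx 2, \qquad \alpha \ge 1,\ \beta \ge 1,\ \gamma \ge 1
$$

Because the FLOPs of a regular convolution grow linearly with depth but quadratically with width and resolution, total FLOPs scale by

$$
\left(\alpha \cdot \beta^{2} \cdot \gamma^{2}\right)^{\phi} \approx 2^{\phi}.
$$

For example, with the values reported in the paper for the B0 baseline ($\alpha = 1.2$, $\beta = 1.1$, $\gamma = 1.15$), $\alpha \beta^{2} \gamma^{2} = 1.2 \times 1.21 \times 1.3225 \approx 1.92$, so each unit increase in $\phi$ roughly doubles the compute budget.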

We will evaluate our scaling method using existing ConvNets, but to better demonstrate its effectiveness, we have also developed a new mobile-size baseline called EfficientNet. Lightweight convolutional networks are emerging as a means to reduce model complexity, and most of them strike a balance between accuracy and computational cost (Howard et al. 2017; Sandler et al. 2018; Tan and Le 2019, 2021).
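Scaling a baseline with the compound coefficient can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions: the constants α = 1.2, β = 1.1, γ = 1.15 are the values reported in the paper for the B0 baseline; the per-stage layer counts and channels in the usage example are an illustrative, hypothetical configuration; and `round_channels` and `compound_scale` are hypothetical helpers (the multiple-of-8 rounding follows a convention seen in common reference implementations, but treat it as an assumption). The sketch does not attempt to reproduce the exact published B1 to B7 configurations.

```python
import math

# Assumption: constants reported in the EfficientNet paper for the B0 baseline
# (found by grid search with phi = 1 under alpha * beta^2 * gamma^2 ~= 2).
ALPHA = 1.2   # depth multiplier base
BETA = 1.1    # width multiplier base
GAMMA = 1.15  # resolution multiplier base


def round_channels(channels: float, divisor: int = 8) -> int:
    """Hypothetical helper: round a scaled channel count to a multiple of `divisor`."""
    rounded = max(divisor, int(channels + divisor / 2) // divisor * divisor)
    # Avoid rounding down by more than 10% of the requested width.
    if rounded < 0.9 * channels:
        rounded += divisor
    return rounded


def compound_scale(layers, channels, resolution, phi):
    """Scale per-stage layer counts, per-stage channels, and input resolution by phi.

    Returns (scaled_layers, scaled_channels, scaled_resolution, flops_factor).
    """
    depth_mult = ALPHA ** phi
    width_mult = BETA ** phi
    res_mult = GAMMA ** phi
    # Depth: round each stage's layer count up to the next integer.
    scaled_layers = [math.ceil(n * depth_mult) for n in layers]
    # Width: multiply channels, then round to a multiple of 8.
    scaled_channels = [round_channels(c * width_mult) for c in channels]
    # Resolution: scale the input image side length.
    scaled_resolution = int(round(resolution * res_mult))
    # Approximate FLOPs growth relative to the baseline: (alpha * beta^2 * gamma^2)^phi.
    flops_factor = (ALPHA * BETA ** 2 * GAMMA ** 2) ** phi
    return scaled_layers, scaled_channels, scaled_resolution, flops_factor


if __name__ == "__main__":
    # Hypothetical baseline: layer repeats and output channels per stage, 224x224 input.
    base_layers = [1, 2, 2, 3, 3, 4, 1]
    base_channels = [16, 24, 40, 80, 112, 192, 320]
    for phi in range(4):
        layers, chans, res, flops = compound_scale(base_layers, base_channels, 224, phi)
        print(f"phi={phi}: layers={layers} channels={chans} res={res} ~{flops:.2f}x FLOPs")
```

Running the script prints the scaled layout for φ = 0 to 3. The FLOPs factor grows by about 1.92 per unit of φ, consistent with the constraint α·β²·γ² ≈ 2; rounding depth up and channels to a fixed multiple is a practical choice that keeps every stage at least as large as in the baseline.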

Powered by this compound scaling method, we demonstrate that a mobile-size EfficientNet model can be scaled up very effectively, surpassing state-of-the-art accuracy with an order of magnitude fewer parameters and FLOPs on both ImageNet and five commonly used transfer learning datasets.

Goyal et al. trained and validated a supervised deep learning model for diabetic foot ulcer (DFU) localization using a Faster Region-based Convolutional Neural Network (Faster R-CNN) with Inception v2. Their method achieved high mean average precision (mAP) in experimental settings.

